Wei Liu (1, 3), Bao-Liang Lu (1, 2, 3, 4, 5)

1. Clinical Neuroscience Center, RuiJin Hospital, Shanghai Jiao Tong University School of Medicine, 197 Ruijin 2nd Rd., Shanghai 200020, People’s Republic of China

2. Center for Brain-Like Computing and Machine Intelligence, Department of Computer Science and Engineering, Shanghai Jiao Tong University, 800 Dongchuan Rd., Shanghai 200240, People’s Republic of China

3. RuiJin-Mihoyo Laboratory, RuiJin Hospital, Shanghai Jiao Tong University School of Medicine, 197 Ruijin 2nd Rd., Shanghai 200020, People’s Republic of China

4. The Key Laboratory of Shanghai Education Commission for Intelligent Interaction and Cognitive Engineering, Shanghai Jiao Tong University, 800 Dongchuan Rd., Shanghai 200240, People’s Republic of China

5. Brain Science and Technology Research Center, Shanghai Jiao Tong University, 800 Dongchuan Rd., Shanghai 200240, People’s Republic of China

Emotions, especially facial expressions, were long thought to be universal: we cry when we are sad and smile when we are happy. However, you may have failed to laugh at a foreign joke and then realized that it relied on a distinct cultural background. Emotions, therefore, seem to have both universal and culturally variable components [1][2][3]. Understanding the relationship between cultures and emotions can help us know whether emotions affect physical health in the same way across cultures, and it can inform us about the effectiveness of mental health interventions for patients with different cultural backgrounds. In addition, from the perspective of affective computing, a deep understanding of cultural influences on emotions can help us build emotion recognition models that generalize to people around the world [4].

Cultural influences on emotions have been a frequent topic in psychology and neuroscience. In 1971, Ekman and colleagues found that subjects from different backgrounds could recognize facial expressions at above-chance accuracies [5]. In recent years, however, this finding has been widely debated [6][7][8]. Some psychologists believe that cultures shape our understanding of the meanings of various kinds of emotions, and that words representing positive or negative emotions might refer to different inner experiences in different cultures [9][10][11]. Studies in psychology have identified a phenomenon named “in-group advantage”, which is mostly observed in experiments that use pictures of facial expressions as stimulus materials. Researchers have found that subjects achieve high recognition accuracy when looking at expressions from their own culture but low accuracy when looking at expressions from other cultures [12][13]. This phenomenon might indicate that cultures influence people’s perception of facial expressions.

A new research field, cross-cultural affective neuroscience (CAN), was proposed in 2012 to study how different cultures influence basic emotional systems. An important assumption in CAN is that a two-way interaction exists between people and cultures [14]. In a review paper, Özkarar-Gradwohl summarized the development of mother-infant interaction studies and found that cultures influence emotional interdependency and relatedness as well as emotion regulation [15]. Özkarar-Gradwohl and colleagues also carried out experiments with Japanese, Turkish, and German subjects using the affective neuroscience personality scales (ANPS) and found both similarities and differences among these three groups [16].

From the perspective of genetics, Tompson and colleagues conducted an experiment with subjects who were carriers or non-carriers of the 7- or 2-repeat allele of the dopamine D4 receptor gene (DRD4). Their results indicated that carriers have a higher tendency than non-carriers to show culturally different response patterns, and they also revealed a significant interaction effect between culture and DRD4 for emotional experience [17]. In the field of affective computing, most previous EEG-based emotion recognition studies either used data from a single culture or focused on recognition models rather than cultural differences [18][19][20].

We studied similarities and differences in emotion recognition with EEG and eye movements among Chinese, German, and French individuals [21]. First, we recruited native Chinese, native German, and native French subjects to participate in emotion-eliciting experiments. During the experiments, the subjects were required to watch movie excerpts of positive, neutral, and negative emotions while EEG and eye movement data were recorded simultaneously. Next, we built unimodal machine/deep learning methods with either EEG or eye movement features and multimodal machine/deep learning methods with both EEG and eye movement features under intra-culture subject-dependent (ICSD), intra-culture subject-independent (ICSI), and cross-culture subject-independent (CCSI) settings (there is no cross-culture subject-dependent setting). Finally, we compared emotion recognition accuracies of different models and analyzed topographical maps, attentional weights, and confusion matrices.
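The three evaluation settings differ mainly in how subjects are assigned to training and test sets. The sketch below illustrates one plausible way to generate such splits. The leave-one-subject-out scheme, the function names (`icsd_split`, `icsi_splits`, `ccsi_splits`), and the toy subject list are assumptions made for illustration; the exact protocol is described in [21].

```python
from collections import defaultdict


def group_by_culture(subjects):
    """Map each culture to the list of its subject ids."""
    by_culture = defaultdict(list)
    for subject_id, culture in subjects:
        by_culture[culture].append(subject_id)
    return by_culture


def icsd_split(trial_indices, n_train):
    """ICSD: train and test on different trials of the same subject."""
    return trial_indices[:n_train], trial_indices[n_train:]


def icsi_splits(subjects):
    """ICSI: within one culture, hold out one subject and train on the rest."""
    for culture, ids in group_by_culture(subjects).items():
        for test_id in ids:
            train_ids = [s for s in ids if s != test_id]
            yield culture, train_ids, [test_id]


def ccsi_splits(subjects):
    """CCSI: train on all subjects of one culture, test on another culture."""
    by_culture = group_by_culture(subjects)
    for train_culture, train_ids in by_culture.items():
        for test_culture, test_ids in by_culture.items():
            if train_culture != test_culture:
                yield train_culture, test_culture, list(train_ids), list(test_ids)


# Hypothetical toy cohort: two subjects per culture.
subjects = [
    ("c1", "Chinese"), ("c2", "Chinese"),
    ("g1", "German"), ("g2", "German"),
    ("f1", "French"), ("f2", "French"),
]
icsi = list(icsi_splits(subjects))
ccsi = list(ccsi_splits(subjects))
```

With three cultures, the CCSI generator yields six ordered train/test culture pairs, which matches the way the attentional weights are later discussed per test culture and training culture.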

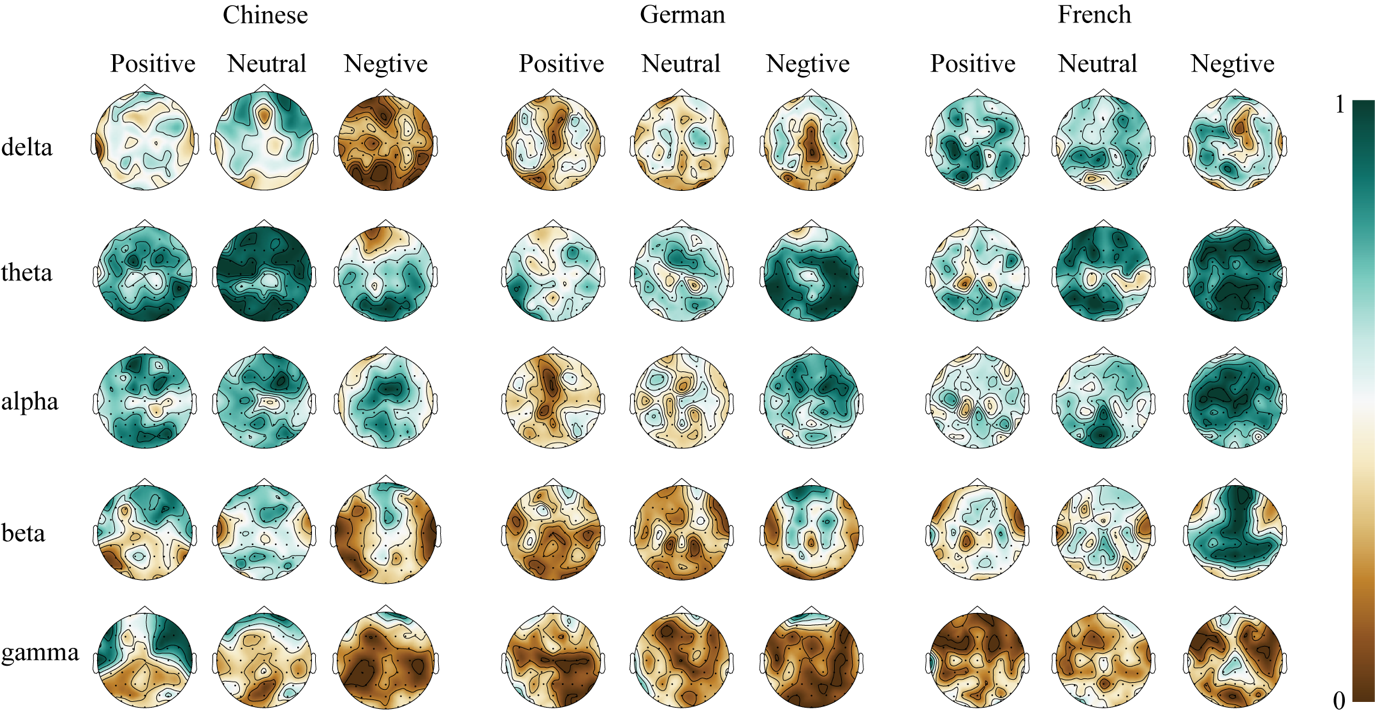

Figure 1: Topographical maps of different cultures and emotions

Figure 1 depicts the average differential entropy (DE) features of the delta, theta, alpha, beta, and gamma bands for each emotion category and culture. For the Chinese subjects, the gamma and beta bands showed the most obvious trends: as the emotion moved from positive to neutral to negative, the area of high-energy brain regions shrank. Positive emotions had the most prominent high-energy regions, located in the temporal and prefrontal lobes. Neutral emotions had smaller high-activation regions located in the prefrontal lobe. Negative emotions had the smallest high-energy regions of the three emotions.

As for German and French subjects, the most obvious trends appeared in theta and alpha bands: positive emotions had the lowest activation, while negative emotions had the highest activation. In addition, the beta band also had similar trends for German and French subjects; namely, EEG activities were low for both positive emotions and neutral emotions but high for negative emotions.

The topographical maps suggest that German and French participants might share similar emotional neural patterns that differ from those of Chinese subjects, and these differences might be affected by culture.
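The DE features plotted in Figure 1 can be illustrated with a small sketch. It assumes the common Gaussian approximation, under which the differential entropy of a band-limited signal with variance σ² is 0.5·ln(2πeσ²). The band-pass filtering step is omitted here, and the function name and the synthetic signals are hypothetical; the source does not detail its own computation.

```python
import math
import random
import statistics


def differential_entropy(band_signal):
    """DE of an already band-filtered signal, assuming it is Gaussian.

    Uses the closed form 0.5 * ln(2 * pi * e * variance).
    """
    variance = statistics.pvariance(band_signal)
    return 0.5 * math.log(2 * math.pi * math.e * variance)


# Synthetic stand-ins for one EEG channel in one frequency band.
rng = random.Random(0)
weak = [rng.gauss(0.0, 1.0) for _ in range(2000)]
strong = [2.0 * x for x in weak]  # same shape, twice the amplitude

de_weak = differential_entropy(weak)
de_strong = differential_entropy(strong)
```

Under this approximation DE grows with the logarithm of band energy, so doubling the amplitude raises DE by ln 2. This is why regions with higher DE are read as "high-energy" regions in the topographical maps.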

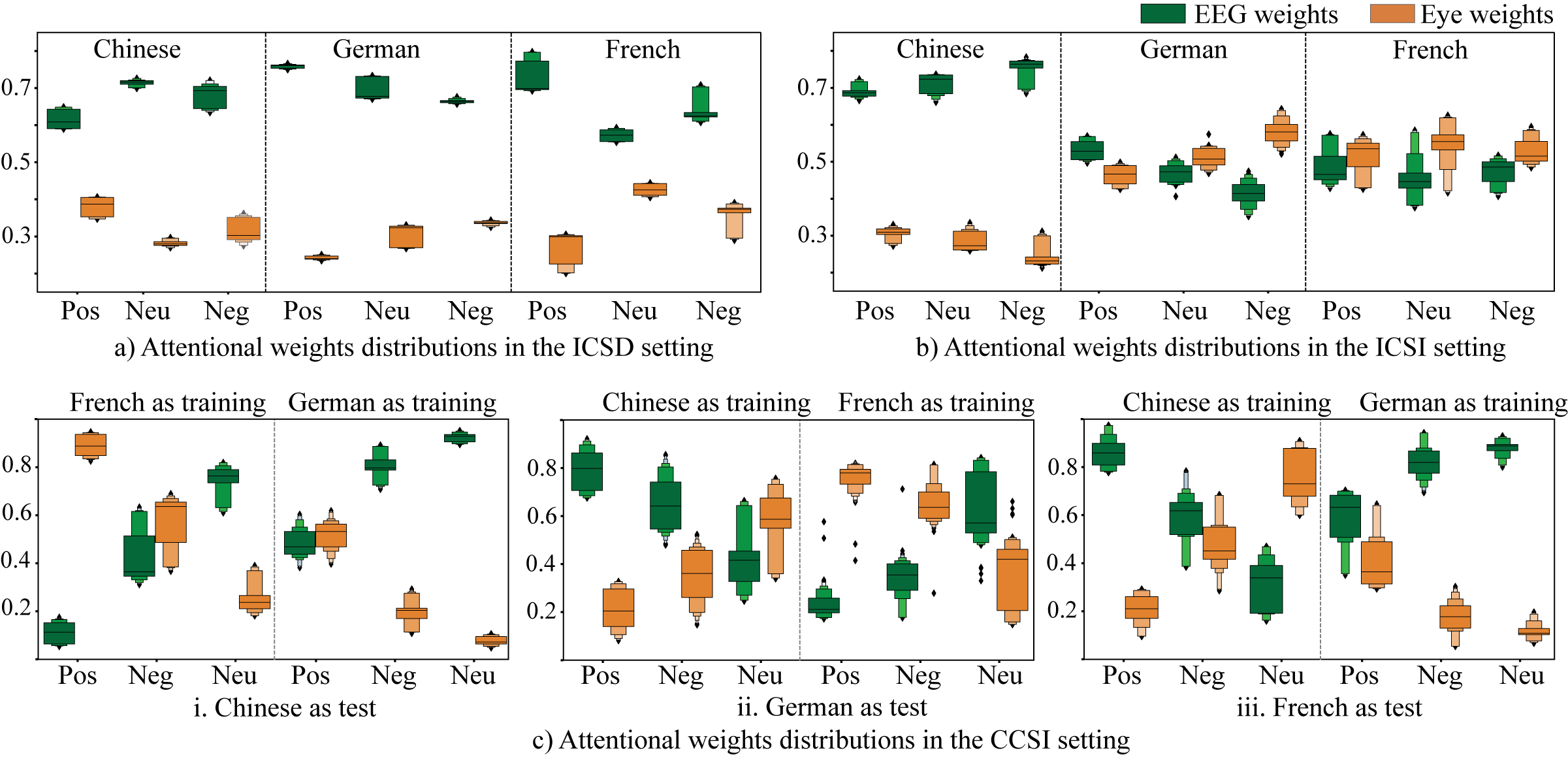

Figure 2: Attentional weight distributions in the ICSD, ICSI, and CCSI settings

Table 1 compares the emotion recognition accuracies of unimodal and multimodal methods in the ICSD setting. For unimodal classifiers, K-nearest neighbors (KNN), support vector machine (SVM), logistic regression (LR), and deep neural network (DNN) were used. For multimodal fusion methods, we compared the performance of concatenation fusion, MAX fusion, fuzzy integral fusion, bimodal deep autoencoder (BDAE), and deep canonical correlation analysis with an attention mechanism (DCCA-AM). Our experimental results indicate that emotion recognition accuracies could be improved by fusing multiple modalities, and the multimodal fusion model based on DCCA-AM achieved the best recognition accuracies among the nine methods.

Table 1: Comparison of mean accuracies (%) of different methods in the ICSD setting

| Type | Method | Input | Chinese | German | French |
|---|---|---|---|---|---|
| Unimodal | KNN | EEG | 72.03 | 53.97 | 46.09 |
| Unimodal | KNN | Eye | 65.35 | 64.80 | 45.06 |
| Unimodal | SVM | EEG | 83.44 | 65.47 | 64.84 |
| Unimodal | SVM | Eye | 75.49 | 78.72 | 51.26 |
| Unimodal | LR | EEG | 80.53 | 62.74 | 56.98 |
| Unimodal | LR | Eye | 73.19 | 75.15 | 46.60 |
| Unimodal | DNN | EEG | 86.53 | 70.87 | 67.52 |
| Unimodal | DNN | Eye | 77.45 | 79.87 | 64.52 |
| Multimodal | Concat | EEG + Eye | 82.43 | 74.54 | 69.07 |
| Multimodal | MAX | EEG + Eye | 80.81 | 78.10 | 62.29 |
| Multimodal | Fuzzy | EEG + Eye | 84.22 | 83.45 | 68.31 |
| Multimodal | BDAE | EEG + Eye | 90.58 | 88.05 | 80.32 |
| Multimodal | DCCA-AM | EEG + Eye | 92.79 | 88.63 | 80.71 |
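The two simplest fusion strategies compared in Table 1 can be sketched as follows. This is an illustrative sketch, not the authors' implementation: concatenation fusion is shown as joining the two feature vectors before classification, and MAX fusion is assumed to take the element-wise maximum of the class probabilities produced by the two unimodal classifiers. The function names, label order, and probability values are hypothetical.

```python
LABELS = ["negative", "neutral", "positive"]  # assumed label order


def concat_fusion(eeg_features, eye_features):
    """Feature-level fusion: one joint vector fed to a single classifier."""
    return list(eeg_features) + list(eye_features)


def max_fusion(eeg_probs, eye_probs):
    """Decision-level fusion: per-class maximum over the two modalities.

    Returns the index of the class with the largest fused score.
    """
    fused = [max(p, q) for p, q in zip(eeg_probs, eye_probs)]
    return max(range(len(fused)), key=fused.__getitem__)


# Hypothetical classifier outputs for one trial.
eeg_probs = [0.2, 0.5, 0.3]
eye_probs = [0.1, 0.2, 0.7]
predicted = LABELS[max_fusion(eeg_probs, eye_probs)]
joint = concat_fusion([0.4, 1.2], [0.9])
```

In this toy trial the EEG classifier alone would predict "neutral", while the more confident eye movement classifier decides the fused result.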

In Figure 2, we present the distributions of attentional weights learned by DCCA-AM in the ICSD, ICSI, and CCSI settings. In the ICSD setting, for Chinese, German, and French subjects, EEG had larger attentional weights than eye movements, which was consistent with the result that the EEG modality achieved higher emotion recognition accuracies than the eye movement modality. This result suggests that EEG might contain more emotion-related information than eye movements, in line with previous studies [22]. In the ICSI setting, for German and French subjects, the eye movement modality outperformed the EEG modality for all three emotions, leading to a much more compact attentional weight distribution than that in the ICSD setting. In the CCSI setting, German and French individuals had similar results, which differed from those of Chinese subjects. When Chinese subjects were the test set, EEG features became increasingly more important than eye movement features for positive, negative, and neutral emotions, regardless of the training culture. When German subjects were the test set, the EEG modality had increasingly larger weights when French subjects were used as the training set, whereas it became less important when Chinese subjects were used as the training set. Similar results held when French subjects were the test set: training on German and on Chinese subjects produced reversed EEG weight distributions.

German and French participants always had similar distributions of attentional weights, and their distributions in the ICSI and CCSI settings differed from those of Chinese subjects. These observations again suggest that German and French individuals might share common emotional response mechanisms, while Chinese individuals might have a distinct one.
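To make the notion of attentional weights concrete, the following minimal sketch shows how two modality representations can be combined with softmax-normalized weights. It is not the DCCA-AM architecture itself: in the actual model the scores are learned, whereas here they are passed in by hand, and the function names and numbers are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(eeg_vec, eye_vec, eeg_score, eye_score):
    """Weighted sum of two equal-length vectors; weights sum to one."""
    w_eeg, w_eye = softmax([eeg_score, eye_score])
    fused = [w_eeg * a + w_eye * b for a, b in zip(eeg_vec, eye_vec)]
    return fused, (w_eeg, w_eye)


# Hypothetical trial where the EEG representation receives the higher score.
fused, (w_eeg, w_eye) = attention_fuse([1.0, 0.0], [0.0, 1.0], 2.0, 0.0)
```

Because the two weights sum to one, a larger EEG weight directly implies a smaller eye movement weight, which is how the weight distributions in Figure 2 can be read as the relative importance of each modality.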

Acknowledgments

This study was a collaborative effort. We would like to acknowledge Siyuan Wu and Lu Gan, who collected the multimodal data for the German and French subjects, respectively, and Ping Guo, who helped write the related work. We would also like to acknowledge Ziyi Li and Wei-Long Zheng for proofreading the manuscript.

References

- Lisa Feldman Barrett, Ralph Adolphs, Stacy Marsella, Aleix M. Martinez, and Seth D. Pollak. Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological science in the public interest 20.1 (2019): 1-68. DOI: 10.1177/1529100619832930

- Alan S. Cowen, and Dacher Keltner. Semantic space theory: A computational approach to emotion. Trends in Cognitive Sciences 25.2 (2021): 124-136. DOI: 10.1016/j.tics.2020.11.004

- Dean Mobbs, Ralph Adolphs, Michael S. Fanselow, Lisa Feldman Barrett, Joseph E. LeDoux, Kerry Ressler, and Kay M. Tye. Viewpoints: Approaches to defining and investigating fear. Nature neuroscience 22.8 (2019): 1205-1216. DOI: 10.1038/s41593-019-0456-6

- Kristen A. Lindquist, Joshua Conrad Jackson, Joseph Leshin, Ajay B. Satpute, and Maria Gendron. The cultural evolution of emotion. Nature Reviews Psychology (2022): 1-13. DOI: 10.1038/s44159-022-00105-4

- Paul Ekman, and Wallace V. Friesen. Constants across cultures in the face and emotion. Journal of personality and social psychology 17.2 (1971): 124. DOI: 10.1037/h0030377

- Lisa Feldman Barrett. Debate about universal facial expressions goes big. Nature 589.7841 (2021): 202-203. DOI: 10.1038/d41586-020-03509-5

- Alan S. Cowen, Dacher Keltner, Florian Schroff, Brendan Jou, Hartwig Adam, and Gautam Prasad. Sixteen facial expressions occur in similar contexts worldwide. Nature 589.7841 (2021): 251-257. DOI: 10.1038/s41586-020-3037-7

- Elizabeth Clark-Polner, Timothy D. Johnson, and Lisa Feldman Barrett. Multivoxel pattern analysis does not provide evidence to support the existence of basic emotions. Cerebral Cortex 27.3 (2017): 1944-1948. DOI: 10.1093/cercor/bhw028

- Carl Ratner. A cultural-psychological analysis of emotions. Culture & Psychology 6.1 (2000): 5-39. DOI: 10.1177/1354067X0061001

- William Forde Thompson, and Laura-Lee Balkwill. Cross-cultural similarities and differences. Handbook of Music and Emotion: Theory, Research, Applications. Oxford (2010). DOI: 10.1093/acprof:oso/9780199230143.003.0027

- Joshua Conrad Jackson, Joseph Watts, Teague R. Henry, Johann-Mattis List, Robert Forkel, Peter J. Mucha, Simon J. Greenhill, Russell D. Gray, and Kristen A. Lindquist. Emotion semantics show both cultural variation and universal structure. Science 366.6472 (2019): 1517-1522. DOI: 10.1126/science.aaw8160

- Petri Laukka, and Hillary Anger Elfenbein. Cross-cultural emotion recognition and in-group advantage in vocal expression: A meta-analysis. Emotion Review 13.1 (2021): 3-11. DOI: 10.1177/1754073919897295

- Hillary Anger Elfenbein, and Nalini Ambady. On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychological bulletin 128.2 (2002): 203. DOI: 10.1037/0033-2909.128.2.203

- G. Özkarar-Gradwohl, J. Panksepp, F. J. İçöz, H. Çetinkaya, F. Köksal, K. L. Davis, and N. Scherler. The influence of culture on basic affective systems: the comparison of Turkish and American norms on the affective neuroscience personality scales. Culture and Brain 2.2 (2014): 173-192. DOI: 10.1007/s40167-014-0021-9

- G. Özkarar-Gradwohl. Cross-cultural affective neuroscience. Frontiers in psychology 10 (2019): 794. DOI: 10.3389/fpsyg.2019.00794

- G. Özkarar-Gradwohl, K. Narita, C. Montag, J. Panksepp, K. L. Davis, M. Yama, and H. R. Scherler. Cross-cultural affective neuroscience personality comparisons of Japan, Turkey and Germany. Culture and Brain 8.1 (2020): 70-95. DOI: 10.1007/s40167-018-0074-2

- Steven H. Tompson, Sarah T. Huff, Carolyn Yoon, Anthony King, Israel Liberzon, and Shinobu Kitayama. The dopamine D4 receptor gene (DRD4) modulates cultural variation in emotional experience. Culture and Brain 6.2 (2018): 118-129. DOI: 10.1007/s40167-018-0063-5

- Sander Koelstra, Christian Muhl, Mohammad Soleymani, Jong-Seok Lee, Ashkan Yazdani, Touradj Ebrahimi, Thierry Pun, Anton Nijholt, and Ioannis Patras. DEAP: A database for emotion analysis; using physiological signals. IEEE transactions on affective computing 3.1 (2011): 18-31. DOI: 10.1109/T-AFFC.2011.15

- Wei-Long Zheng, Wei Liu, Yifei Lu, Bao-Liang Lu, and Andrzej Cichocki. EmotionMeter: A multimodal framework for recognizing human emotions. IEEE transactions on cybernetics 49.3 (2018): 1110-1122. DOI: 10.1109/TCYB.2018.2797176

- Wei Liu, Jie-Lin Qiu, Wei-Long Zheng, and Bao-Liang Lu. Comparing recognition performance and robustness of multimodal deep learning models for multimodal emotion recognition. IEEE Transactions on Cognitive and Developmental Systems (2021). DOI: 10.1109/TCDS.2021.3071170

- Wei Liu, Wei-Long Zheng, Ziyi Li, Si-Yuan Wu, Lu Gan, and Bao-Liang Lu. Identifying similarities and differences in emotion recognition with EEG and eye movements among Chinese, German, and French people. Journal of Neural Engineering 19 (2022): 026012. DOI: 10.1088/1741-2552/ac5c8d

- Yifei Lu, Wei-Long Zheng, Binbin Li, and Bao-Liang Lu. Combining eye movements and EEG to enhance emotion recognition. Twenty-Fourth International Joint Conference on Artificial Intelligence. 2015.