RESEARCH

December 2019

Original paper: J. E. O’Doherty*, S. Shokur*, L. E. Medina, M. A. Lebedev, M. A. L. Nicolelis. Creating a neuroprosthesis for active tactile exploration of textures (2019). Proceedings of the National Academy of Sciences. https://doi.org/10.1073/pnas.1908008116

Solaiman Shokur

Sensory neuroprostheses offer the promise of restoring perceptual function to people with impaired sensation [1], [2]. In such devices, diminished sensory modalities (e.g., hearing [3], vision [4], [5], or cutaneous touch [6]–[8]) are reenacted through streams of artificial input to the nervous system, typically using electrical stimulation of nerve fibers in the periphery [9] or neurons in the central nervous system [10]. Restored cutaneous touch, in particular, would be of great benefit for the users of upper-limb prostheses, who place a high priority on the ability to perform functions without the necessity to constantly engage visual attention [11]. This could be achieved through the addition of artificial somatosensory channels to the prosthetic device [1].

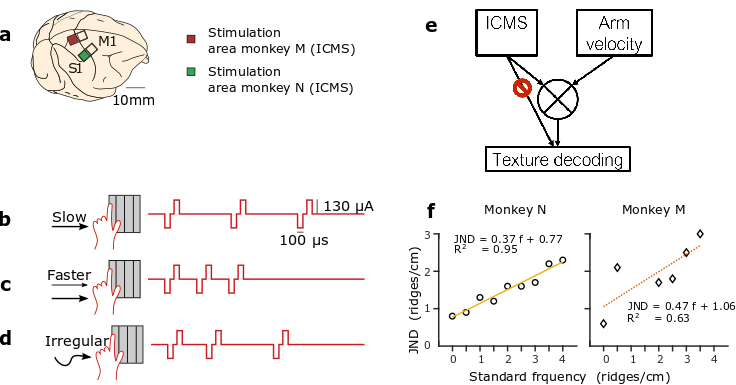

Haptic exploration of objects involves several stereotypic procedures, such as static contact for temperature sensation, holding for weight, enclosure for gross shape, pressure for hardness, contour following for exact shape, and lateral fingertip motion for texture [12]. In a new study, published in PNAS in October 2019 [13], we present a neuroprosthetic paradigm that functionally reproduces the sensation of fingertip motion against texture. We hypothesized that intracortical microstimulation (ICMS) pulses generated by exploratory movements over virtual gratings and delivered to the primary somatosensory cortex (Fig. 1a) would allow discrimination of texture coarseness. Crucially, this was the first attempt at such encoding in an active exploration task.

Active exploration of texture

Two rhesus monkeys explored pairs of visually identical objects on a screen using the “fingertip” of an avatar and were rewarded for selecting the object with denser virtual gratings (see video). The gratings consisted of evenly spaced ridges and were completely invisible, so the task could only be performed through a sense of “touch” that was conveyed via patterns of ICMS generated as the avatar’s fingertip moved over the gratings. We encoded the texture using a strategy that was as simple as it was powerful: we delivered a single pulse of ICMS each time the avatar fingertip crossed over a ridge of a grating. This meant that both the specific texture and the way it was explored affected the pattern of ICMS pulses.

The monkeys learned to interpret these ICMS patterns, evoked by the interplay of their voluntary movements and the virtual textures of each object. Movements at a constant velocity across a grating with a given texture produced an ICMS pulse train with a constant temporal pulse rate (Fig. 1b). Movements at a faster velocity across the same grating produced a pulse train with a correspondingly higher pulse rate (Fig. 1c). Irregular movements produced temporally varying ICMS pulse trains (Fig. 1d).
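The encoding rule described above (one ICMS pulse per ridge crossing) can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the study: the function names, the sampled-position input, the placement of ridges at integer multiples of `ridge_spacing`, and the units (cm and s) are all assumptions made for the example.

```python
import math


def ridge_crossing_pulses(times, positions, ridge_spacing):
    """Return one pulse time for every ridge the fingertip crosses.

    Assumption: ridges sit at integer multiples of ridge_spacing along
    a single axis, and positions are sampled densely in time.
    """
    pulse_times = []
    for i in range(1, len(positions)):
        prev_cell = math.floor(positions[i - 1] / ridge_spacing)
        cell = math.floor(positions[i] / ridge_spacing)
        # A change in cell index means ridges were crossed (in either direction).
        pulse_times.extend([times[i]] * abs(cell - prev_cell))
    return pulse_times


def constant_velocity_sweep(velocity, duration, start=0.5, dt=0.001):
    """Hypothetical fingertip trajectory: a straight sweep at constant velocity."""
    n_steps = int(round(duration / dt))
    times = [k * dt for k in range(n_steps + 1)]
    positions = [start + velocity * t for t in times]
    return times, positions


# Same grating (1 ridge per cm), explored for 1 s at two different speeds.
slow_pulses = ridge_crossing_pulses(*constant_velocity_sweep(10.0, 1.0), ridge_spacing=1.0)
fast_pulses = ridge_crossing_pulses(*constant_velocity_sweep(20.0, 1.0), ridge_spacing=1.0)
print(len(slow_pulses), len(fast_pulses))
```

For a constant-velocity sweep, the pulse rate in this sketch is the velocity divided by the ridge spacing, so doubling the speed over the same grating doubles the pulse rate, in line with Fig. 1b and 1c.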

Fig. 1. (a) Two rhesus monkeys (monkey M and monkey N) were chronically implanted with multielectrode cortical arrays. For a grating with a given spatial frequency, slow scanning (b) would produce a lower ICMS pulse rate than faster scanning (c). Irregular scanning (d) of a grating produced irregular ICMS pulse trains. (e) Schematic of the strategy for texture decoding: the ICMS pulse train and the arm velocity should be integrated to discriminate the texture encoding. (f) Just noticeable differences (JNDs) for monkey M (diamonds) and monkey N (circles) as a function of the standard frequency. Linear fits, the corresponding functions, and R² values are shown for each graph.

Artificial ICMS texture feedback follows Weber’s law

In one of the first examples of objective measurements of human perception, Ernst Heinrich Weber (1795-1879) described the following law: “In comparing objects and observing the distinction between them, we perceive not the difference between the objects, but the ratio of this difference to the magnitude of the objects compared.” This fundamental law of perception is observed for visual, auditory, and tactile stimuli, as well as other modalities. In our study, we found that monkeys’ discrimination of texture coarseness, encoded via ICMS, followed Weber’s law: the just noticeable difference (JND) between frequency pairs increased proportionally to the stimulation frequency (Fig. 1f).
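As a hedged restatement in symbols (the notation is introduced here for illustration and is not taken from the paper), let f be the standard stimulation frequency, Δf the JND at that frequency, and k a constant Weber fraction. Weber's law then reads:

```latex
\Delta f = k \, f
\quad\Longleftrightarrow\quad
\frac{\Delta f}{f} = k
```

Under this relation, a plot of JND against standard frequency is a straight line with slope k, which is why linear fits are a natural summary of the data in Fig. 1f.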

Texture integration

Under some conditions, interpreting the texture required the dynamic integration of ICMS information with arm proprioceptive feedback or corollary discharge of motor and sensory cortical regions (Fig. 1e). We found evidence of such integration in one of the two monkeys. We hypothesize that the difference in response between the two monkeys reflects the difference in the stimulation areas. Indeed, the stimulation region for monkey N was in the leg area, while for monkey M it was in the receptive fields of the same arm used to control the joystick. Therefore, it is possible that interference between feedback from natural somatosensory pathways (hand touching the joystick, proprioception) and S1 ICMS feedback made task performance more difficult for monkey M.
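The integration sketched in Fig. 1e can be illustrated with a simple idealized observer. The following Python sketch is an assumption-laden illustration, not a model of what the monkeys' brains compute: it supposes that the observer has access to an average pulse rate and an average scanning speed, and the function names and units (Hz, cm/s) are hypothetical.

```python
def spatial_frequency(pulse_rate_hz, speed_cm_per_s):
    """Estimate ridges per cm by dividing the pulse rate by the scanning speed."""
    if speed_cm_per_s <= 0:
        raise ValueError("scanning speed must be positive")
    return pulse_rate_hz / speed_cm_per_s


def denser_object(obs_a, obs_b):
    """Pick the object with the denser grating.

    Each observation is a (pulse_rate_hz, speed_cm_per_s) pair.
    Returns "A", "B", or "tie".
    """
    freq_a = spatial_frequency(*obs_a)
    freq_b = spatial_frequency(*obs_b)
    if freq_a > freq_b:
        return "A"
    if freq_b > freq_a:
        return "B"
    return "tie"


# Identical pulse rates, but object A was scanned more slowly,
# so its grating must be denser.
choice = denser_object((20.0, 10.0), (20.0, 20.0))
print(choice)
```

The example shows why pulse rate alone is ambiguous: the same 20 Hz train corresponds to different textures depending on how fast the fingertip was moving, so velocity information has to be combined with the ICMS signal.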

While delivering sensory feedback to an ethologically meaningful cortical area likely helps the subject assimilate a limb prosthesis as a natural appendage [14]–[16], the use of different somatosensory regions in the cortex may facilitate sensory-motor integration and tactile acuity. Therefore, we suggest that it may be necessary to deliver artificial sensory feedback to multiple cortical regions simultaneously to achieve the best performance of such limb prostheses.

Resonating with the sensitive world

During this task, our monkeys’ brains resonated with the virtual objects, much as Merleau-Ponty poetically describes in ‘Eye and Mind’:

‘Visible and mobile, my body is a thing among things; it is caught in the fabric of the world and its cohesion is that of a thing’ [14]. Hopefully, in the not too distant future, neuroprostheses equipped with stimulators similar to the one we used will permit patients with sensory deficits to lose themselves in the fabric of the world.

[2] J. L. Collinger, R. A. Gaunt, and A. B. Schwartz, “Progress towards restoring upper limb movement and sensation through intracortical brain-computer interfaces,” Curr. Opin. Biomed. Eng., 2018.

[3] B. S. Wilson, C. C. Finley, D. T. Lawson, R. D. Wolford, D. K. Eddington, and W. M. Rabinowitz, “Better speech recognition with cochlear implants,” Nature, vol. 352, no. 6332, pp. 236–238, 1991.

[4] M. S. Humayun et al., “Visual perception in a blind subject with a chronic microelectronic retinal prosthesis,” Vision Res., vol. 43, no. 24, pp. 2573–2581, 2003.

[5] R. A. Normann, E. M. Maynard, P. J. Rousche, and D. J. Warren, “A neural interface for a cortical vision prosthesis,” Vision Res., vol. 39, no. 15, pp. 2577–2587, 1999.

[6] N. A. Fitzsimmons, W. Drake, and T. L. Hanson, “Primate reaching cued by multichannel spatiotemporal cortical microstimulation,” J. Neurosci., 2007.

[7] R. Romo, A. Hernández, A. Zainos, and E. Salinas, “Somatosensory discrimination based on cortical microstimulation,” Nature, vol. 392, pp. 387–390, 1998.

[8] D. W. Tan, M. A. Schiefer, M. W. Keith, J. R. Anderson, J. Tyler, and D. J. Tyler, “A neural interface provides long-term stable natural touch perception,” Sci. Transl. Med., 2014.

[9] S. Raspopovic et al., “Restoring natural sensory feedback in real-time bidirectional hand prostheses,” Sci. Transl. Med., vol. 6, no. 222, p. 222ra19, 2014.

[10] J. E. O’Doherty et al., “Active tactile exploration using a brain–machine–brain interface,” Nature, vol. 479, no. 7372, pp. 228–231, 2011.

[11] D. J. Atkins, D. C. Y. Heard, and W. H. Donovan, “Epidemiologic overview of individuals with upper-limb loss and their reported research priorities,” J. Prosthetics Orthot., vol. 8, no. 1, p. 2, 1996.

[12] S. J. Lederman and R. L. Klatzky, “Hand movements: A window into haptic object recognition,” Cogn. Psychol., 1987.

[13] J. E. O’Doherty, S. Shokur, L. E. Medina, M. A. Lebedev, and M. A. L. Nicolelis, “Creating a neuroprosthesis for active tactile exploration of textures,” Proc. Natl. Acad. Sci., p. 201908008, 2019.

[14] M. Merleau-Ponty, “Eye and Mind,” in The Primacy of Perception, J. M. Edie, Ed., 1964.

Author Biography

Dr. Solaiman Shokur is a Senior Scientist at the Bertarelli Foundation Chair in Translational Neuroengineering in Geneva. His previous work includes the development of a virtual reality-based brain-machine interface for rhesus monkeys (Ifft et al. 2013) and closed-loop brain-machine interfaces that integrated both motor and tactile functions (O’Doherty et al. 2011; Shokur et al. 2013). From 2015 to 2019, he was the research coordinator at the Alberto Santos Dumont Association for Research Support in Sao Paulo, Brazil, where he was in charge of the development of neurorehabilitation tools for patients with spinal cord injury (Shokur et al. 2016; Selfslagh et al. 2019). He also coordinated a long-term training protocol (28 months) integrating non-invasive brain-machine interfaces with visuotactile feedback and locomotion, which demonstrated unprecedented levels of neurological recovery in patients with the most severe cases of spinal cord injury (Shokur et al. 2018; Donati et al. 2016).