This section includes five key areas of ethical consideration: safety, risk, and well-being; authority, power and coercion; justice and fairness; agency and identity; and surveillance and privacy. These five areas emerged through the development of the IEEE Brain neuroethics framework and capture the main dimensions of potential ethical issues with neurotechnology across the IEEE Brain neuroethics working groups.

Medical neurotechnologies have a wide variety of applications, including preventing, detecting, and eliminating disease and dysfunction, as well as restoring health. However, the meaning of both suffering and health in the neurological context is not self-evident. Is health the prevention, avoidance, or mitigation of neural or psychiatric disease? Is it the maintenance of higher-order cognitive structure? Is suffering determined by internal metrics or external observers? Is marked functional impairment a prerequisite for participation in clinical trials, or is a clinical diagnosis using recognized outcome measures sufficient? More generally, proactive approaches to health and wellness, including the use of commercial mobile, wearable, and neurotechnologies, are increasingly recognized as essential to a more sustainable vision of next-generation healthcare, based on patient-centric and value-based approaches rather than on the retroactive treatment and management of acute and chronic disease.

Similarly, it is necessary to evaluate how “safety” and “harm” come to be defined across studies, clinical trials, and regulatory bodies. Definitional differences can result in uneven application of ethical frameworks. An application is considered safe here when its benefit outweighs its potential harms. Harm includes negative impacts on the physical body (e.g., pain or infection from implantation), negative impacts on psychological or psychosocial state (e.g., experiences of invasiveness, surveillance, or loss of control), mishandling or misuse of personal data, and misuse of the technology. Further, the uncertainty regarding the predictive value of neural data, both in the prediction of neuropathology and in inferring mental state from observations of the brain, raises additional questions about how neurotechnologies promote health and wellbeing both now and in the future. Such uncertainties seem especially relevant with regard to the use of neurotechnology on children. The uncertainty about how best to define harm, when many questions remain about the basic biological structures of the brain and the relation between brain health and environment, is also an important consideration.

It is also necessary to reconsider the meaning and experience of invasiveness of neurotechnologies in terms of potential harms. Neurotechnologies interfacing with the brain and spine often require surgical implantation in the body. This could involve full neurosurgery, as in the case of deep brain stimulation (DBS), or implantation in the muscle or under the skin not requiring anaesthetic, as in the case of peripheral nerve stimulation (PNS). Other, non-invasive neurotechnologies do not require implantation at all, for example tDCS devices, which can be worn. The use of invasive devices may increase health risks related to damage to the neural tissue from electrode insertion, bio-incompatibility of the materials, and connection or disconnection of devices. However, the risks of invasive devices might be offset by fewer side effects, owing to more focused and selective intervention, and by greater autonomy for the user (e.g., not relying on others to don or doff an extracorporeal device). Engineers, manufacturers, and healthcare providers must pay close attention to acknowledging, reviewing, and balancing the benefits against the health risks related to materials, implementation, and maintenance of physically invasive devices, including risks that might be uncommon. In other words, any organization implementing these devices must adhere to the principles of beneficence and non-maleficence (do no harm), ensuring that the potential benefits outweigh the harms, and must disclose any health-related issues. While in many places it is required by law to disclose physiological risks, other types of risk, such as changes to impulsiveness associated with deep brain stimulation, are disclosed only as a matter of standard practice. Expanding the meaning of risk as articulated in the law to include the areas addressed above could better adhere to the beneficence principle.

Patients and caregivers must be made aware of all risks that accompany the use and implementation of invasive devices, as well as be informed of the uncertainties around these interventions. While current practices focus on the physical invasiveness of such technologies (e.g., implantables are typically considered more invasive than wearables), research suggests that the extent to which technologies disrupt or alter everyday experiences, sometimes called “life invasiveness,” may in fact be a more important consideration for users (Monteleone, 2020; Bluhm et al., 2021). While perhaps outside the scope of an engineer’s traditional ethical responsibilities, more consideration needs to be given to the supports that can and should be available to neurotechnology users to attend not only to their physical safety, but also to their mental and psychosocial wellbeing. Requiring that neurotechnology users be provided with access to a clinical psychologist with expertise in neurotechnology may not only increase safety, but could also improve long-term clinical outcomes. For example, if a user continued to experience side effects after a device was turned off, a modified version of cognitive behavioral therapy (CBT) may help reverse the effects of the technology. Clinical trials and treatments using neurotechnology could be combined with certain forms of therapy to help reduce potential negative outcomes of using such long-lasting technologies.

Long-Term and Off-Target Impacts: Considering the potential for off-target and long-term side effects and the degree of reversibility of neurotechnologies is also key to ethical development and use. While the term “irreversible” is often used in this context, the relatively short time span covered in current clinical research means that it is not always obvious what changes produced by neurotechnologies are permanent as opposed to long-term. When considering reversibility as a design requirement, it is necessary to not only consider structural changes, but psychological and psychosocial changes as well. Further, balancing the potential for long-term impacts and side effects with potential—but as of yet uncertain—long-term health benefits for individuals with neurological and neuropsychiatric conditions is key in both development and clinical application. For example, one might consider whether it is ethical or not for clinicians to enroll patients in early stages of disease based on unclear long-term health benefits.

Given that some neurotechnologies, even non-invasive wearables, have long-lasting and possibly irreversible effects, clear safety guidelines around the testing and use of neurotechnology should be implemented during the development phase, and feedback from multiple stakeholders, including users, should be incorporated after production and distribution. The European Union, for example, requires mandatory “post-market surveillance” for medical devices, and user experiences, including serious adverse effects, must be included in an annual risk assessment procedure for continuous re-evaluation of the performance and risk of a medical device.

Risk of Off-Label or Dual Use: The irresponsible development and application (misuse) of neurotechnology may occur across all sectors, though it may be more likely in the case of consumer neurotechnologies that are not governed by medical device regulations or subject to the same standards and independent review processes required of human subjects research. Nevertheless, the development of medical neurotechnologies may be considered to carry a “spillover risk” resulting from the likelihood of off-label or “dual use” (when referring to use in military applications) (Ienca et al., 2018). The use of robotic exoskeletons for the physical augmentation of military and combat personnel presents one such example, in addition to a number of military applications of brain-computer interfaces that have been actively explored and tested (Binnendijk et al., 2020), such as their use in monitoring the remote pilots of unmanned aerial vehicles (Pham et al., 2020). This issue is explored further in the military application. Given the inherent risk of dual or off-label use of neurotechnologies and the limited ability of their original developers to control future applications, the assessment and management of such risks demands a systematic, well-coordinated, community-wide approach, such as the “neurosecurity framework” proposed by Ienca and colleagues (2018) or the Operational Neuroethical Risk Assessment and Mitigation Paradigm (ONRAMP) developed by Giordano (2017).

The definition of “off-label use” is very strict in medical device regulations. Every medical device approval specifies the medical condition for which the device can be used. Any application that has not been defined in the process of medical device development and approval falls under this off-label definition. If Parkinson’s disease has been defined as the intended use for a deep brain stimulator, any other medical indication is “off-label”. Therefore, other applications need to be investigated in the course of clinical trials with the involvement of ethics committees and legal authorities. The aim of such a trial might be, for example, to generate scientific knowledge or to bring the device to market for the new application. Such a medical device is still compliant with the so-called “essential requirements” (i.e., the general safety aspects of biocompatibility and electrical safety); however, its performance in the “off-label use” and its potential benefits and medical side effects need to be the subject of a clinical trial.

Control Studies and Placebo Effects: In pharmaceutical approval studies (double-blind, i.e., neither subject nor doctor knows which treatment is given), pills with no pharmaceutical agent can be given to differentiate between placebo effects and efficacy of the drug. The transfer of this approach to medical devices, especially active implants, needs special care. Clinical studies have to be designed such that the risk of an implantation is well balanced against the benefits to the subject. Therefore, either double-blind studies are not undertaken, or the implant is not switched on for a certain period of time. However, such a procedure has to be tailored to each and every application, since some diseases have a limited window of opportunity in which such a treatment should start. Stroke therapy is an example in which a delay in therapy might have serious effects on the outcome. Clinical studies should therefore be designed through case-specific decisions rather than general rules, so that they are the best possible with respect to beneficence and non-maleficence, even when this means accepting lower levels of clinical evidence.

Design Priorities: Clearly aligning anticipated outcomes with user priorities is imperative in the design of medical technologies. Factors that contribute to device abandonment, such as lack of consideration of user opinion in selection, poor device performance, or a mismatch between user needs and device performance (Phillip and Zhao, 1993), must be carefully attended to, particularly in cases where reversing the intervention may be complex or impossible. Involvement of stakeholders in design and application is key (MacDuffie, 2022), given the uncertainty of intervention and the ambiguity of terms such as “health” and “harm” in these applications.

Post-trial access: Clinical trials of medical neurotechnologies can be ethically problematic in ways that trials of pharmaceuticals are not. For instance, there are significant risks of infection and hemorrhage associated with stereotactic neurosurgery, an invasive procedure for the implantation and positioning of deep brain stimulation (DBS) electrodes. Trial participants also frequently undergo follow-up surgeries for device removal, maintenance (e.g., battery replacement), and repair (e.g., lead adjustment). Because of these risks, enrolment is typically restricted to only the most treatment-refractory patients, who have demonstrated resistance to all available therapies. As a result, it has also been argued that the “last resort” status of neurosurgical interventions may lead participants to form unrealistic expectations about the likelihood of benefit (Stevens & Gilbert, 2021). These devices are also prohibitively expensive, with surgical costs for DBS exceeding 65,000 USD and replacement batteries costing at least an additional 10,000 to 20,000 USD (Stroupe et al., 2014). Certain invasive neuromodulation techniques have also been found to induce unexpected side effects affecting participants’ identity, personality, and sense of self, raising questions about the ultimate justification for these trials, given the uncertainty of clinical benefit and significant surgical and long-term risks.

In addition to ethical issues arising before (e.g., informed consent) and during (e.g., stimulation-induced personality change) clinical trials of neural implants, ethical issues also arise in the post-trial phase (Higgins, Gardner & Carter, 2022). Recent publications have drawn attention to lamentable scenarios in which participants are abandoned when sponsors withdraw investment, requiring them to pay out of pocket for the upkeep of their implants or else have them removed (Drew, 2020; Strickland & Harris, 2021; Bergstein, 2015). This raises important questions about the responsibilities of trial stakeholders after the end of the trial. For instance, is there an ethical obligation to ensure that participants who have personally benefited during the trial receive post-trial access? On the one hand, the Declaration of Helsinki states that “sponsors, researchers and host country governments should make provisions for post-trial access for all participants who still need an intervention identified as beneficial in the trial” (World Medical Association, 2013). On the other hand, post-trial access to neural implants entails a substantial commitment of time, money, and clinical resources that may endure for the lifetime of the participant. While investigators are generally supportive of participants being assured of post-trial access (Muñoz et al., 2020; Lázaro-Muñoz et al., 2022), implementing this approach requires extensive collaboration amongst trial stakeholders and contingency planning prior to the commencement of the trial.

Ensuring that neurotechnologies and the contexts in which they are used expand and support user choice and informed consent is imperative. Given the uncertainty in outcomes, the potential for off-target short-term and long-term effects, and the use of neurotechnologies in the context of neurological or neuropsychiatric conditions, questions arise about user autonomy and the ability to grant truly informed consent. Further, attention must be given to situations in which information necessary for consent may be asymmetrically distributed. For example, when a person is consenting to use a technology that relies on algorithmic decision-making, it is possible that neither the user nor the clinician understands why and how the technology operates well enough for the consent to be fully informed (Ferretti, Ienca, Rivas Velarde, et al., 2021). Currently accepted informed consent processes in medical settings may not require professionals to share information that may be salient for emerging neurotechnologies, further contributing to these asymmetries (see, for example, Mergenthaler et al., 2021). Additionally, considering cross-cultural and transnational power imbalances is key, as discussed further in section {Social and Cultural}.

How and by whom a healthy or pathological neurological state is determined also requires substantial consideration. For example, adherents to the neurodiversity movement, which embraces conditions such as autism and ADHD as valuable forms of diverse functioning (as opposed to pathology), would define a state of health and wellbeing very differently than a clinician focused on the mitigation or elimination of specific, measurable functional limitations.

Example Authority and Power Questions for Consideration:

- What information is necessary for users to understand the technology enough to provide informed consent? Is that information currently provided in clinical settings?

- Does this technology impact autonomy or restrict user choice?

As with other biomedical technologies, there are concerns about access to neurotechnologies, because expensive, intensive, and specialized interventions are not equitably distributed. Some neurotechnologies may not even be available to those with expensive and comprehensive private healthcare policies. In the United States, for example, most private insurers will cover only a small amount of the cost of medical equipment, including prosthetics (between US$2500 and US$5000), putting neuroprosthetics out of reach for most people outside of clinical trials. This raises a serious philosophical question: is it ethical to devote time and funds to the development of prohibitively expensive technology?

Further, gatekeeping procedures for access to interventions need to be interrogated, including understanding pathways to access, potential barriers, or discriminatory structures. In the United States, for example, deep brain stimulation is much more likely to be offered and given to privately insured white men who are financially well-off (Benesh et al., 2017; Chan et al., 2014). Gatekeeping procedures can contribute to widening pre-existing inequalities, as well as greater pressure to use off-label, over-the-counter, or non-therapeutic technologies in order to narrow the gap. Considerations of justice and fairness will change depending on context: for example, who is enrolled in a research study has different ethical dimensions than who has access to a commercially-available device (e.g., Choy, Baker, and Stavropoulos, 2022). Equity in access across the globe must also be considered. Simply examining the impacts of the neurotechnology without addressing the social and political contexts in which it may be deployed fails to adequately grapple with questions of justice (see Wexler and Specker-Sullivan, 2021, for a more in-depth discussion of these issues).

Training and Rehabilitation: It is necessary to consider the length and extent of rehabilitation and device training periods for medical technologies. In addition to the logistical considerations for geographical or economic access to specialists, it is also imperative to consider user and clinical expectations for technology management. For example, a user who consents to an implant without having full knowledge of an extensive device training and adjustment period in advance has not provided fully informed consent.

Replacement and Repair: Technologies that require specialists for replacement and repair should also be carefully considered. Economic and geographic access to specialists, as well as how and for how long a user may need to function without the technology during repairs should all be considered in the design and application.

Example Justice and Fairness Questions for Consideration:

- What barriers or challenges exist to accessing technology and treatment (including post-surgical therapies where appropriate)? Are these barriers inequitably distributed?

- To what extent is the design likely to produce unequal barriers to access, such as cost or long or specialized training or rehabilitation periods?

- How can users and users’ values, preferences, and priorities be integrated into the design process both individually and as a community?

In addition to the issues of power and authority that may externally impact choice, neurotechnologies may impact a user’s decisional capacity, thus impeding authentic autonomous choices. Autonomy here refers to the ability to freely and independently make decisions and act on them.

Considering that autonomy is articulated as a principal component of informed consent, there are also substantial questions regarding whether neurotechnologies threaten or improve autonomy. For example, how does a predictive brain-machine interface (BMI) impact an individual’s ability to make autonomous choices? How do closed-loop devices impact autonomy as the human is removed from the loop? For users enrolled in research, are post-study obligations and impacts sufficiently understood and communicated? Ultimately, questions of power and autonomy in the development and use of neurotechnologies centrally revolve around whether and how the use of these technologies constricts choice and autonomy in users.

The variable (and often poorly defined) meaning of reversibility, as discussed in the Safety, Wellbeing, and Risk section, raises additional questions of informed consent and autonomy. The continuum of reversibility includes everything from abandonment of external devices to explantation to simply turning off an implanted device, and each point on this continuum is accompanied by its own set of considerations.

Additionally, there are concerns about the potential impact neurotechnologies may have on personality (the characteristic patterns of thoughts, feelings, and behaviors that make a person unique), identity (who one understands themselves to be), autonomy (whether one can make their own decisions), agency (whether one can act on those decisions), or authenticity (whether one feels that who they are is who they want to be or are meant to be) in ways that are qualitatively different from previous interventions. As our understanding of the neural basis of consciousness, identity, and volition continues to evolve, these questions will need to remain central to the development and use of neurotechnologies (for an example of empirical work in this space, see Haeusermann, Lechner, Fong, et al., 2023).

There are additional considerations related to agency when new systems have automatic on/off stimulation algorithms, or long-range remote systems that allow clinicians or engineers to change a patient’s stimulation level, since these are more susceptible to hacking or malicious interference.

As more neurotechnologies that directly affect behavior are developed, in particular implantables that seem to bypass the subject’s control of actions, issues about assigning responsibility are likely to become more pressing. Can an individual be held solely responsible for their actions when those actions are modulated by a medical implant? Can responsibility be attributed to the devices? To the engineers who designed these types of brain implants, or the manufacturers who sell and distribute them? To the clinician involved in the programming of the device (if it is an open-loop device that requires programming by a clinician)? Experience from neuropharmacology could be taken as a point of departure for developing assessment scenarios and rules for neurotechnologies. While engineers are primarily responsible and liable for the safety of any device and its reliability for its intended use, short- and long-term changes in neural correlates and behavior have to be investigated under the supervision and responsibility of clinicians and clinical neuroscientists.

Example Agency and Identity Questions for Consideration:

- Does this technology pose any potential threat to user autonomy or agency, either intentionally or unintentionally?

- Is the spectrum of reversibility for this technology clearly understood and articulated?

While some medical devices benefit from constantly collecting data, this also raises concerns about invading the privacy of individuals through monitoring. Pre-existing standards, such as the IEEE Standard for Biometric Privacy (2410-2021), can provide some guidance in terms of privacy, but further consideration is required.

Neural Data: Across the full range of potential applications (both primary and secondary, medical and non-medical), the misuse or mismanagement of user data generated by neurotechnologies (including both neural data as defined by IEEE WG P2794 and other signal types) is an ongoing risk. In large part, this represents an extension of the general problem posed by personal/protected health information of all forms.

However, brain function (and thus neural data) in particular is commonly recognized as more central to an individual’s agency, autonomy, identity, and personhood than other forms of biological and health-related data. Moreover, the extent to which any particular type of biodata may be personally revealing increases significantly when multiple modalities of data are collected and analyzed together, along with behavioral, demographic, and clinical health outcome data.

There are additional concerns regarding misuse of this data (Ienca, Haselager, and Emanuel, 2018; Butorac and Carter, 2021). Specific scenarios of neural data misuse include the use of this data for profiling purposes—for instance, by an individual’s present or prospective employer or health insurance provider, to assess and predict the individual’s personal dispositions, behaviors, and health risk profile. Additional issues arise if the data is accepted as more credible than the lived experiences of users. Such misuses of neurodata may become even more ethically problematic when arising in the context of power relationships, such as teacher-student or employer-employee relationships.

Privacy: Further, questions of privacy, ownership, and access over neural data raise additional concerns. For example, who owns the data stored in a neural implant? Can the company that manufactures the device have any control over the type of data that is shared and with whom? Are medical device manufacturers the owners of the data collected, or can individual users have a claim of ownership and thus have a say on uses beyond what is medically necessary? How should a person’s interest in sharing their personal data be balanced against potential societal benefits and harms? Data and its value must be decoupled from decisions about whether a therapy is granted or not. This connects to the point of data authorization and consent, as current informed consent forms and even privacy user agreements do not truly inform users about who makes use of the data and how it is being used, undermining the “informed” aspect of informed consent.

Cybersecurity: Cybersecurity issues and misuse are also important to consider. How are these issues complicated when brain implants may be operating under malicious interference? The risk of malicious interference is heightened when considering novel systems that might allow long-range remote changes to a patient's stimulation (Cabrera and Carter-Johnson, 2021).

An added complication is that it is not settled whether neural data is meaningfully different from other forms of medical data (Ienca, Fins, Jox, et al., 2022). One scenario in which it could indeed be different is when a third party uses the neural data to hack a neural controller, that is, a device that controls neural activity. The implications for the wellbeing of the user and the potential deprivation of agency, given that the neural activity may in turn control certain behaviors, could be a major source of concern not immediately arising in other forms of data.

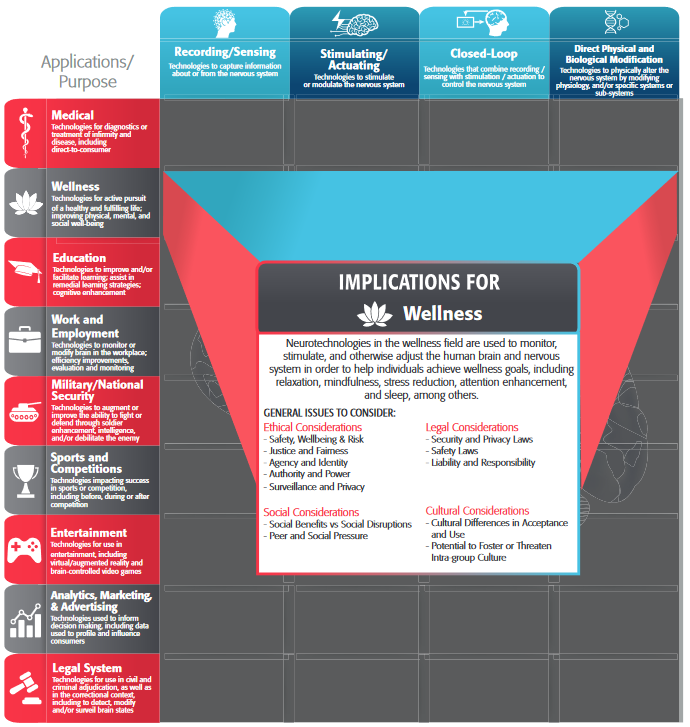

Additionally, monitoring and privacy need to be considered in pathways for the development of neurotechnologies that begin outside of medical contexts. While wellness applications of neurotechnologies are outside the official scope of the medical application and covered elsewhere in the framework {see Wellness Application}, there remains an increasing amount of attention and interest in the potential use of non-medical consumer device data for medical applications.

Such use of consumer device data for health/wellness and medical applications represents a rapidly evolving regulatory landscape, which has recently been recognized by the U.S. Food and Drug Administration (FDA) in an official guidance document, Policy for Device Software Functions and Mobile Medical Applications.

Example Surveillance and Privacy Questions for Consideration:

- What is the intended use of collected data? Who has access to it? Who has ownership of it?

- How is user privacy protected? What are possible risks to that privacy?

- How and by whom is data collection for this technology regulated?