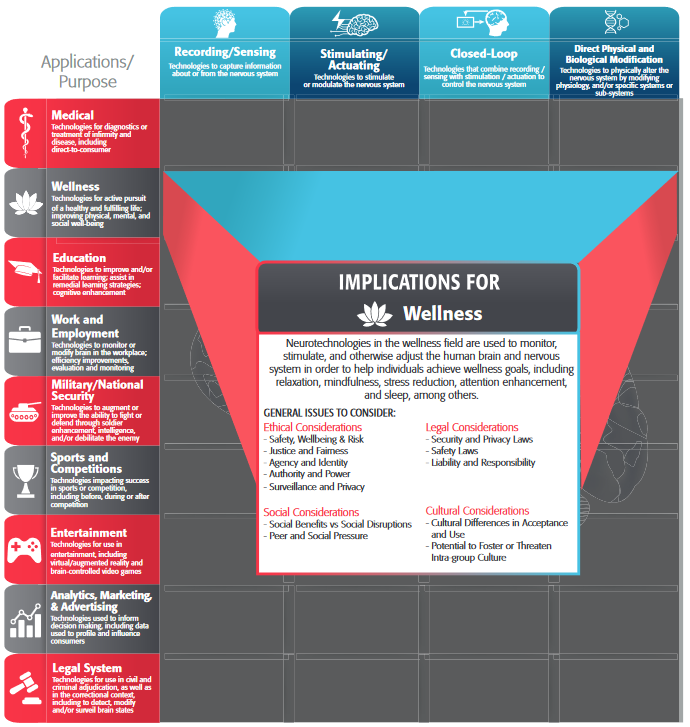

In addition to the general issues outlined above, each category of neurotechnology has ethical, legal, social, and cultural issues that are particularly salient. These are outlined below.

There are multiple issues pertinent to sensing and recording devices, with privacy and data misuse being particularly relevant. These portable devices are intended to record data in real time and transmit it to a local or remote data repository. Because they record data in real-world settings, such devices are, as noted by ECRI (2020, 2021), vulnerable to attack and/or data compromise. Any corruption or loss of data may have serious implications for the delivery of medical care or for the privacy of the user. Recorded information can also be correlated with other sources of information and subsequently used to infer the actions or intentions of the device user, raising ethical issues surrounding the treatment, ownership, and use of that information.

In addition to the full range of health and safety risks associated with all implantable medical devices, medical neurotechnologies with recording/sensing functions present a particular and unique risk to user privacy, identity, and confidentiality. In particular, any neural data recorded from device users has the potential to reveal highly personal, sensitive aspects of cognition and behavior, especially when analyzed in conjunction with other demographic, clinical, psychometric, and/or behavioral data. More generally, a looming ELSC hazard is that any sufficiently rich data set can become personally identifiable through ML/AI algorithms. This applies across all types of biometric and health data, but neural data is likely to be considered especially personal and sensitive by the individual, insofar as it may directly reflect or quantify emotional and cognitive states and aspects of identity.

The scientific reporting of such details is a topic currently being standardized by IEEE Working Group P2794, Reporting Standards for in vivo Neural Interface Research.

Example Questions for Recording/Sensing Medical Neurotechnologies:

- What is the known and foreseeable information content of the acquired raw signal? (This will depend on recording location(s), number of channels, and recording modality. In general, more invasive neural interfaces generate data with higher spatial and/or temporal resolution, and thus present a potentially greater risk.)

- Which metrics/parameters are extracted from the raw data?

- How much individual variability is there in the collective (neuro)data ‘fingerprint’ collected?

- Which types of data are saved? (Raw data, or processed parameters?)

- How might the sum of this saved data enable the future extraction of additional information or predictive capabilities?

- Which other biodata types and personal health information are collected in conjunction with the saved neurodata?

Devices intended to modulate or modify neural activity through stimulation face significant challenges arising from incomplete knowledge of the neural processes they interact with. For example, common side effects of deep brain stimulation (DBS) include headaches, confusion, seizures, and stroke. In addition to the acute and chronic safety risks associated with all neuromodulation technologies (e.g., pain, acute or chronic tissue damage, undesirable neuroplastic effects, habituation to stimulation) and/or implantable devices (e.g., infection, biotoxicity, inflammation), as discussed above, key ELSCI issues to consider include the potential for modulating the user’s sense of identity, agency, or emotional wellbeing. For instance, given a sufficiently accurate understanding of the underlying neural circuit, one could in principle design stimulation patterns to fully control its neural dynamics. If the relationship between neural activity and behavior is also well characterized, a properly optimized neural controller could, in theory, be used to control behaviors beyond the intended medical applications. This risk applies to closed-loop technologies as well. The question of agency has especially strong implications in the legal domain, where it underpins notions of responsibility.

Example Questions for Stimulating/Modulating Medical Neurotechnologies:

- To what extent is the use of the technology likely to produce lasting or potentially irreversible changes (or changes perceived as irreversible to the user)?

- To what extent is the user likely to become dependent on the technology for completing basic activities of daily living, and thus (potentially) for their sense of agency and autonomy?

The challenges mentioned above are exacerbated when neural stimulation is driven by real-time decoding of neural activity (i.e., closed-loop operation). The dynamic interaction between the neural system and the neurotechnological device is more difficult to model and describe, and the resulting system behavior is more complex.

Because closed-loop neural control systems depend on neural recording/sensing, the ELSC considerations above also apply. Imperfect decoding of user intention may cause errant or unintended device or interface outputs, and the risk posed by such decoding errors varies with context. The use of neural recordings as part of the control architecture for an autonomous vehicle, for instance, would likely present unacceptably high risks, given the potentially fatal nature of automotive accidents. By contrast, a neurally controlled prosthetic arm designed to let the user feed themselves would in most cases present a considerably lower risk, both to the user and to others. While context is a key determinant of the reliability requirements for medical devices, neurotechnology should be held to high reliability standards, because even in low-risk cases the risks to users (both physical and psychological) remain significant.

For both {Stimulating and Actuating} and {Closed-Loop} Medical Neurotechnologies, issues of efficiency, safety, and traceability are of particular relevance.

Example Questions for Closed-Loop Medical Neurotechnologies:

- How much control does the user have over the device?

- How easy is it for the user to deactivate the device at any moment? How long does deactivation take?

- Does performance rely on adaptive algorithms that learn from user activity? Does performance instead depend on user learning/improvement? To what extent is each true?

- To what extent are the (adaptive) algorithms validated?

- How user-specific are the algorithms? In less user-specific cases, what is the cost of inaccurate predictions from models that do not generalize to the specific user?

As with the previous categories, direct physical and biological modification technologies exist on a spectrum of reversibility, as discussed in {Safety, Risk and Wellbeing section}, which demands a high standard of safety and efficiency.

Example Questions for Direct Physical and Biological Modification Medical Neurotechnologies:

- Is the intervention reversible?

- What are the added risks from biological modification?