Joseph J. Fins, MD, MACP, FRCP, is the E. William Davis, Jr., MD Professor of Medical Ethics, Professor of Medicine and Chief of the Division of Medical Ethics at Weill Cornell Medical College, Co-Director of the Consortium for the Advanced Study of Brain Injury (CASBI), Weill Cornell Medicine and Rockefeller University, and The Solomon Center Distinguished Scholar in Medicine, Bioethics and the Law, Yale Law School. Dr. Fins’ published works include “Rights Come to Mind: Brain Injury, Ethics and the Struggle for Consciousness,” Cambridge University Press, 2015. In this interview, Dr. Fins shares his insights on neuroethics, discussing its challenges and benefits and the important role engineering plays in developing technologies and innovative medical devices while also addressing ethical concerns related to patients.

What are your specific areas of interest as they relate to neuroethics?

Joseph J. Fins: I don’t think there’s one overall, generic take on what neuroethics is. For instance, there’s a speculative neuroethics concerned with cyborgs, neuro-privacy, enhancement and transhumanism, which isn’t my brand of neuroethics. Then there’s a minority strand in neuroethics that is concerned with the clinic and the needs of patients and families touched by neuropsychiatric illness. That’s the more pragmatic school that I try to advance, and it’s why I’m so delighted to work with engineers through IEEE. Engineers are pragmatic folks trying to make a tangible difference in the world. So, for me, it’s really about designing products and instrumentation that can actually intervene in an injured or ill brain and either make a better diagnosis or have a therapeutic impact.

How did you get involved in this brand of neuroethics?

I got into neuroethics about 20 or so years ago, helping to catalyze a deep brain stimulation (DBS) project, which was a new technology at that time. Working with Weill Cornell neurologist Nicholas Schiff and others, we undertook the first implantation of a deep brain stimulator in the central thalamus of a patient in a minimally conscious state (MCS). This patient had been grievously injured, had been unable to talk or eat, and communicated only intermittently, episodically and unreliably with some eye movements. Placing the deep brain stimulator bilaterally into the intralaminar nucleus of the thalamus led to the patient being able to say six- or seven-word sentences, recite the first 16 words of the Pledge of Allegiance, and eat by mouth rather than through a feeding tube for the first time in six years. He had improved postural tone and could tell his mother he loved her, as well as go shopping and voice a preference about the clothing he wanted to wear. My role was to figure out the ethics of doing a phase one clinical trial in which we were still in a state of equipoise, in which we had therapeutic intent, but in which the patient could not give his own consent. I had to design the ethical framework for this work. So, I’ve been involved in a kind of therapeutic and translational neuroethics for about 20 years; the work described above was published in Nature in 2007. Today, having demonstrated proof of principle, we are continuing that work with a BRAIN Initiative grant, doing deep brain stimulation in patients who are able to give consent and to participate in the consent process. I am studying their responses to efforts to restore cognitive function with DBS.

What was the key ethical challenge in advancing that project?

Obtaining consent for a more-than-minimal-risk intervention in a subject who could not consent was a real challenge. One of the arguments I had to advance was that we were confusing respect for personhood with consent. It’s one thing to do something without consent to a subject who has the capacity to provide consent, or to do something over somebody’s objection when they have the capacity to object. It’s quite another to insist upon consent from a subject who cannot give it when the object of the intervention is to restore their ability to participate in decisions and to regain some modicum of assent or consent. In the DBS in MCS study, our work demonstrated that this particular subject regained the ability to assent: not at the level of true decision making required for consent, but at a lower level. I called it the regaining of agency ex machina, and I wrote about this in my book, Rights Come to Mind: Brain Injury, Ethics and the Struggle for Consciousness. It was a truly remarkable thing, because with a neuroprosthetic device designed by engineers, we were able to properly place, calibrate and modulate the device and restore voice to a patient who had lost the ability to speak. Through neuroethics, neuroscience and neuroengineering, we were able to integrate cognitive function and help a subject rejoin the conversation.

Where do you draw the line for such work being more for the patient or more for advancing the technology?

Joseph J. Fins: First of all, we have to distinguish between a subject and a patient. Having said that, the well-being of the individual always has to come first. There has to be a therapeutic intention, and the work has to be grounded in a robust scientific hypothesis. Patients are not a means to an end; subjects are not a means to an end. We have a dual obligation to try to advance the science but, most importantly, to attend to the well-being of the subjects. This is really the central conflict in research ethics: all research carries risk, because we are in a state of ignorance. We’re in a state of equipoise. We don’t know whether it’s going to work. That said, there are ways to mitigate harm and proceed incrementally. For example, the intervention employed in our DBS in MCS study was already an established, approved therapy for Parkinson’s Disease (PD). In fact, we were using an established device in a similar region of the brain. For PD, it had been approved as therapeutic because its balance of safety and benefit was favorable.

How does your collaboration play out through the IEEE Brain Initiative?

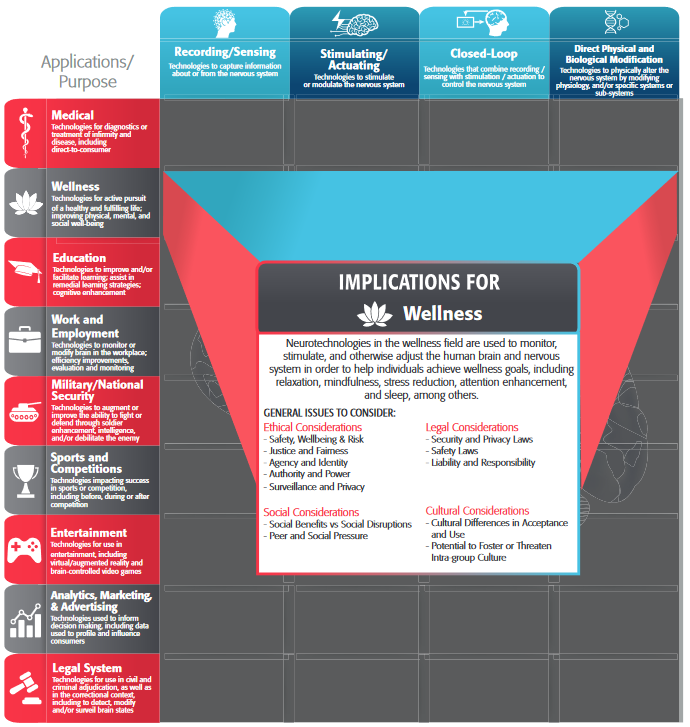

Joseph J. Fins: We had a great meeting in the fall of 2018 where a sprinkling of us ethicists interacted with engineers. We discussed some of the challenges of devices, technology and other modalities from the bottom up. This was a great approach, because so much of bioethics is done top down, with principles engrafted onto context. Instead, we were really talking about particulars and using inductive (vs. deductive) moral reasoning, in this case about the implications of closed-loop devices intervening in the brain. What would that mean for questions like agency, personality, communication, autonomy, choice and responsibility? It wasn’t a hypothetical conversation; it was grounded in questions such as: What can these devices do now? What might we realistically expect them to do in five or ten years? How do we prepare the landscape for that? How do we train future engineers to be cognizant of the broader ethical implications of their work?

One of the issues that I think is really interesting is this: if you had an assistive device in the brain that made its own decisions, whether it robbed an individual of their ability to make autonomous choices or aided them in making choices, how much responsibility would the individual assume if they made a bad choice? What kind of controls would you want to put in? How would legal structures accommodate a bad choice? To take a more familiar example, if you’re driving an autonomous car, are you still responsible for what happens while you’re driving it? But now it’s your mind. How do we build in safeguards so that you still maintain dominion over yourself and aren’t hijacked by the technology? But if you have an impairment where you need to be assisted, how do we maximize the assistance without undermining your autonomy? Of course, if we help with your impairment, we’re ultimately giving you more ability to be independent and self-determining. So it’s a question of balance and, for engineers, of design! These issues will require not only a normative or ethical framework but will also present an engineering challenge: “We think these are the parameters within which we should operate. Can you guys design that? Can you folks make a device that does that?”

Any final thoughts you would like to share on your collaboration with IEEE Brain?

Joseph J. Fins: I think one of the key things is that we are moving forward with developing new devices and new technologies, as evidenced by the BRAIN Initiative’s motivating document, which is really about technology. As we do so, it’s critically important that we engage the voices of stakeholders, patients and families who’ve been touched by severe brain injury, disorders of consciousness, psychiatric illness, neurologic illness and the like, to find out what works for them. It’s really important to know what kinds of devices are user-friendly and what remediations are desired. There may be trade-offs to consider, such as being able to improve memory at the expense of something else. What’s really important is engaging the people who are going to be the beneficiaries (and bearers of the burdens) of these interventions. We need to involve them in the discussions from the beginning, so that we don’t make a really cool gizmo that nobody wants to use or that nobody understands.

I think this touches on the notion of embodiment. For instance, I am a student of the cello. When I get a new bow, it could be a great bow, but it doesn’t fit my hand. It doesn’t feel like an extension of my arm. For me, it’s a really bad prosthetic device. It may be a beautifully designed bow, perfectly weighted and light, a great bow in someone else’s hands but terrible in mine. So I think we have to really understand this notion of embodiment: these devices become an extension of the corporeal reality of the individual. We have to ask, “Does this work for you? What would make it better? How do you want to participate?” We need to realize that they are the end users, and I think the IEEE efforts are helping to establish a broader collaboration, widening the pool of discussants to basic scientists, engineers, ethicists, family members and patients, which will help us design better, more user-friendly devices. It might also make those devices more marketable, because the people using them would be more likely to buy them, support them or sustain them in the marketplace down the road. Fundamentally, this also becomes a question of disability rights, a topic I have been applying to neuroethics. There is a saying in the disability rights movement: “Nothing about us without us.” So it’s great that IEEE is seeking to expand the conversation beyond the technical, so that whatever technological advance is made will have the most impact in alleviating the burdens imposed by neuropsychiatric injury and illness. I feel privileged to be part of this conversation.