A new paradigm for probabilistic neuromorphic programming

P. Michael Furlong¹* and Chris Eliasmith¹

¹Centre for Theoretical Neuroscience, University of Waterloo, 200 University Ave., Waterloo, N2L 3G1, Ontario, Canada.

*Corresponding author: michael.furlong@uwaterloo.ca; contributing author: celiasmith@uwaterloo.ca

Keywords: probability, Bayesian modelling, vector symbolic architecture, fractional binding, spatial semantic pointers

1 Introduction

Since it was first introduced, neuromorphic hardware has held the promise of capturing some of …

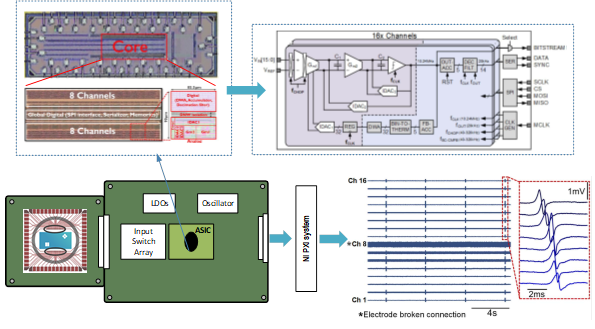

Direct-Digitization Neural Readouts for Fully-Integrated and High-Density Neural Recording

Monitoring large groups of neurons in various brain regions, including superficial and deep structures, is crucial for advancing neuroscience research on cognition, motor control, and behavior, among other areas [1], [2]. Current extracellular CMOS high-density neural probes are becoming the new standard in electrophysiology, allowing simultaneous recording with excellent spatial and temporal resolution [3]–[5]. However, there is still demand for neural recording technologies that can access significantly more neurons, enabling the decoding of more complex motor, sensory, and cognitive tasks. Achieving this requires neural probes with a much higher channel count, which in turn requires readout circuits that meet several requirements: i) area and power efficiency, ii) low noise to capture weak neural signals, iii) the ability to interface with high-impedance electrodes that have large DC offsets, and iv) tolerance to artifacts caused by movement or concurrent electrical stimulation.

An Interview with the 2022 SSCS-Brain Joint Society Best Paper Award Winners

In 2022, Prof. Mahsa Shoaran, together with Uisub Shin, Laxmeesha Somappa, Cong Ding, Yashwanth Vyza, Bingzhao Zhu, Alix Trouillet, and Stéphanie P. Lacour of EPFL, won the SSCS-Brain Joint Society Best Paper Award. In an interview with the award winners, IEEE Brain Magazine discussed the broader potential impact of their work.

NeuRRAM: RRAM Compute-In-Memory Chip for Efficient, Versatile, and Accurate AI Inference

December 2022

RESEARCH

Weier Wan, Rajkumar Kubendran, Clemens Schaefer, S. Burc Eryilmaz, Wenqiang Zhang, Dabin Wu, Stephen Deiss, Priyanka Raina, He Qian, Bin Gao, Siddharth Joshi, Huaqiang Wu, H.-S. Philip Wong, Gert Cauwenberghs

AI-powered edge devices such as smart wearables, smart home appliances, and smart Internet-of-Things (IoT) sensors are already pervasive in our lives. Yet most of these devices are smart only when they are connected to the internet. Under limited battery capacity and tight cost budgets, the local chipsets inside these devices can perform only relatively simple data processing, while the more computationally demanding AI tasks are offloaded to the remote cloud.

Neuromorphic Model of Human Intelligence

December 2022

RESEARCH

Anna W. Roe

Scientists and engineers have long drawn inspiration from the biological world to understand how architecture gives rise to function. To learn how to fly, study the architecture of bird and insect wings [1]. To build a master swimmer, study the architecture of fish and amphibian neuromuscular oscillators [2]. In the same vein, to understand intelligence, study the architecture of human and nonhuman primate brains. This last endeavor (neuromorphics, or neuromorphic computing) has generated ‘smart machines’ that can mimic perception and motor behavior and that are modelled on the currency of brain function: neuronal spike firing [3,4]. Such approaches have driven the development of new computing architectures that overcome von Neumann bottlenecks, GPUs that accelerate computation through massive parallelism, in-memory processors, and implementations of attractor networks and finite state machines [5]. Year by year, we see gains in benchmark performance, expanding hardware and software technology, and increasingly sophisticated deep neural networks [6]. However, despite these breathtaking advances, many basic functions of intelligent systems (rapid and efficient memory access, behaviorally targeted resource allocation, on-the-fly responses to ever-changing contexts, and energy-efficient computation) remain fundamentally out of reach.