RESEARCH

Bleichner M. G., Grzybowski M., Ernst S. M. A., Kollmeier B., Debener S., Denk F.

While you are reading this text, pay attention to the sounds around you. How many different sounds do you notice? Where do they come from? Concentrate on one of them. Is the sound high or low pitched? Now concentrate on a different sound. Does that sound have a specific rhythm?

Apparently, you can to some degree choose which sounds you hear and which you ignore. Hearing is an active cognitive process: you do not hear with your ears alone, and your brain plays a crucial role. It allows you to shift your attention between sound sources and to ignore what is currently not of interest to you. But your brain is not only important for hearing; hearing is also very important for your brain, and hearing loss may have negative effects on it.

Hearing loss makes soft sounds inaudible, but more importantly, it can hamper speech communication, particularly in challenging social situations. Consequently, hearing loss can lead to social isolation and eventually to cognitive decline [1], effects that can aggravate each other. According to the World Health Organization, 6 percent of the world’s population and one-third of people over 65 years of age suffer from disabling hearing loss, and these numbers are expected to increase over the coming years. A better understanding of the interdependence of hearing and the brain is therefore an important factor for public health.

Modern hearing devices can greatly improve the quality of life of their users. Their powerful digital signal processing algorithms can emphasize sounds from certain directions, filter out unwanted noise, or automatically adjust to the listening situation. However, particularly in acoustically challenging social situations with background noise and many people, current hearing devices often fail to restore normal hearing and leave many users unsatisfied. We argue that hearing devices can be improved by taking the users’ listening intentions and their current mental state into account. To this end, we work on merging brain-computer interface technology with hearing aid technology.

Electroencephalography (EEG) is a method to “listen” to the brain’s electrical activity. This activity is recorded with electrodes placed on the skin of the head. From EEG data recorded under laboratory conditions one can, for instance, derive which of several sounds the listener is attending to [2]–[5], whether this person is generally attentive or not, whether the listener perceives two sounds as similar or different, or whether the listener perceives a sound at all. If this information were available to a hearing aid, the device could adapt better to its user.
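One common way to derive the attended sound, used for example in [2], is stimulus reconstruction: a linear model maps the EEG back to an estimate of the speech envelope, and this estimate is correlated with the envelope of each competing talker. The Python sketch below shows only that final comparison step. It assumes the reconstructed envelope is already available (the trained decoder is not shown), and the function names and toy envelopes are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm = math.sqrt(sum((a - mean_x) ** 2 for a in x)
                     * sum((b - mean_y) ** 2 for b in y))
    return cov / norm if norm else 0.0

def decode_attended(reconstructed, candidate_envelopes):
    """Return (index of the best-matching talker, list of correlation scores).

    reconstructed: envelope estimated from EEG by a hypothetical decoder (not shown).
    candidate_envelopes: one speech envelope per competing talker.
    """
    scores = [pearson(reconstructed, env) for env in candidate_envelopes]
    best = max(range(len(scores)), key=lambda i: scores[i])
    return best, scores

# Made-up envelopes, for illustration only.
speaker_a = [0.0, 1.0, 0.5, 0.2, 0.9, 0.1]
speaker_b = [0.8, 0.1, 0.3, 0.9, 0.0, 0.6]
reconstructed = [0.1, 0.9, 0.6, 0.3, 0.8, 0.2]

attended, scores = decode_attended(reconstructed, [speaker_a, speaker_b])
print(attended)  # 0 -> speaker_a
```

Because the toy reconstruction follows speaker_a closely, its correlation is high and the decoder picks index 0; in real data the correlations are much smaller and are usually computed over windows of several seconds.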

To combine brain recordings with hearing aids, we have to record brain activity not only in the laboratory but also beyond the lab, in everyday situations. The recording system therefore needs to be transparent, in the sense that neither the person using it nor the people around them notice it [6]. In our setup, we use miniaturized wireless EEG systems that fit into a trouser pocket, off-the-shelf smartphones for stimulus presentation, signal acquisition, and analysis, and special inconspicuous EEG electrodes. We have developed the cEEGrid (figure 1; www.cEEGrid.com), a flex-printed array of electrodes that is attached inconspicuously around the ear [7]. The electrodes do not occlude the ear canal and can be combined with any hearing device. With these electrodes, we can monitor EEG activity over extended periods, during an entire workday and even during sleep. We have shown in several studies that this setup allows investigating a multitude of different brain processes [3], [8].

Figure 1: Experimental setup. The cEEGrid is attached with an adhesive around the ear. The ear-mold containing the transducers of the hearing device and 3 additional electrodes (black wires) is placed in the outer ear and the concha. Sounds are presented by the hearing device and brain responses are recorded with ear-EEG.

In a recent study [9], we combined this ear-EEG with an experimental hearing device (see figure 1) that delivers sounds to the hearing aid user in the most natural way [9], [10]. As a proof of principle, we wanted to know whether changes in the acoustic behavior of this hearing device are reflected in the brain activity and whether these changes can be detected with ear-EEG.

We presented a sequence of four identical sounds to the participants via headphones. The sounds were picked up by the hearing device, which delivered them to the eardrum. For the last sound in the sequence, we sometimes manipulated the hearing device settings so that a different sound arrived at the eardrum. The brain responses (event-related potentials, ERPs) to these sounds were recorded using ear-EEG.
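An ERP is obtained by cutting the continuous EEG into short segments (epochs) time-locked to each sound onset and averaging them, so that activity unrelated to the sound tends to cancel out. The minimal Python sketch below shows this for a single channel stored as a plain list, with onsets and window lengths given in samples. The baseline correction, the names, and the toy data are illustrative assumptions, not the preprocessing pipeline used in [9].

```python
def extract_epochs(signal, onsets, pre, post):
    """Cut baseline-corrected epochs from a single-channel EEG signal.

    signal: list of samples; onsets: sound-onset sample indices;
    pre/post: number of samples kept before/after each onset (pre > 0).
    Each epoch has the mean of its pre-stimulus part subtracted.
    Onsets too close to either end of the recording are skipped.
    """
    epochs = []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > len(signal):
            continue
        segment = signal[start:stop]
        baseline = sum(segment[:pre]) / pre
        epochs.append([value - baseline for value in segment])
    return epochs

def average(traces):
    """Sample-wise mean of equally long traces.

    Applied to the epochs of one participant this yields their ERP;
    applied to the ERPs of all participants it yields the grand average.
    """
    n = len(traces)
    return [sum(values) / n for values in zip(*traces)]

# Toy recording with two sound onsets (sample indices 2 and 6).
signal = [0, 1, 1, 3, 0, 2, 2, 6, 0]
erp = average(extract_epochs(signal, onsets=[2, 6], pre=1, post=2))
print(erp)  # [0.0, 0.0, 3.0]
```

The same averaging step, applied across participants, gives grand averages such as those shown in figure 2.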

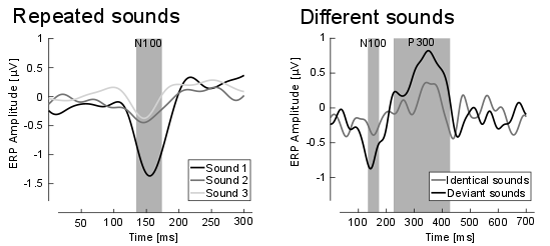

In this paradigm, one expects to find two specific brain activity patterns. First, for repeated identical sounds one expects a much weaker, adapted brain response compared to the first sound (see figure 2, left side), i.e. a reduction in the amplitude of the respective ERP. This amplitude reduction also indicates that the sounds were perceived as identical. Second, when a sequence of identical sounds is interrupted by a different sound, the brain reacts more strongly again (see figure 2, right side), i.e. the amplitude of the respective ERPs increases. This increase in brain activity indicates that the sounds were perceived as different.

Figure 2. Left: Grand average ERP (so-called N100) responses for sound 1 (black), sound 2 (dark grey), and sound 3 (light grey). The time window of interest is indicated in grey. A clear reduction in amplitude for sounds 2 and 3 compared to sound 1 is apparent. Right: Grand average ERP responses to the identical sound (grey line) and the different sound (black line) at the last position of the sequence. The time windows of interest for the N100 and P300 responses are indicated in grey. A clear difference in amplitude is visible in these time windows.
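Both expected patterns can be quantified as mean ERP amplitudes within a time window of interest. The Python sketch below assumes ERPs sampled at fs Hz whose first sample lies t0 seconds relative to sound onset. The N100 window of 80 to 150 ms is a typical range chosen purely for illustration, not the window used in the study, and all function names are hypothetical.

```python
def window_mean(erp, fs, t0, start_s, end_s):
    """Mean ERP amplitude between start_s and end_s seconds after onset.

    fs: sampling rate in Hz; t0: time of erp[0] relative to onset in seconds
    (e.g. -0.2 for a 200 ms pre-stimulus baseline).
    """
    i0 = round((start_s - t0) * fs)
    i1 = round((end_s - t0) * fs)
    if i0 < 0 or i1 > len(erp) or i0 >= i1:
        raise ValueError("time window lies outside the ERP")
    window = erp[i0:i1]
    return sum(window) / len(window)

# Illustrative N100 window in seconds (an assumption, not the study's value).
N100_WINDOW = (0.08, 0.15)

def adaptation_effect(erp_first, erp_repeated, fs, t0, window=N100_WINDOW):
    """Repeated minus first sound in the N100 window.

    The N100 is a negative deflection, so a positive result means the
    response to the repeated sound is smaller, i.e. adapted.
    """
    return (window_mean(erp_repeated, fs, t0, *window)
            - window_mean(erp_first, fs, t0, *window))

def deviance_effect(erp_identical, erp_different, fs, t0, window):
    """Different minus identical last sound (difference-wave amplitude)."""
    return (window_mean(erp_different, fs, t0, *window)
            - window_mean(erp_identical, fs, t0, *window))

# Toy ERPs: 20 samples at 100 Hz starting at onset (t0 = 0).
first = [0.0] * 8 + [-4.0] * 7 + [0.0] * 5
repeated = [0.0] * 8 + [-2.0] * 7 + [0.0] * 5
print(adaptation_effect(first, repeated, fs=100, t0=0.0))  # 2.0
```

For the deviance effect, the expected sign depends on the component: a stronger N100 to the different sound gives a negative value, a larger P300 a positive one.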

We found exactly the expected EEG patterns (see figure 2). Even very subtle changes in the acoustic properties of the hearing device were perceived by the participants and were reflected in the brain response. We conclude that it is indeed feasible to combine a hearing device with ear-EEG technology. Despite the restricted electrode positions and the small distances between the electrodes, brain processes related to auditory perception can be reliably recorded, even while a hearing device in the same ear is active.

In our study, the hardware was mobile, but the experiment was still stationary. The next steps will be to merge the hearing aid and EEG recording hardware into one integrated device and to move out of the lab. This will allow us to study the relationship between brain activity and aided hearing in everyday situations. One of the many challenges that need to be overcome along the way is real-time EEG artifact attenuation, which is needed to separate the tiny brain signals from larger, interfering signals.
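Established real-time artifact attenuation methods, such as artifact subspace reconstruction, are considerably more involved than what fits here. As a deliberately simple stand-in, the Python sketch below processes a single EEG channel sample by sample and flags each completed block whose peak-to-peak amplitude exceeds a threshold, so that downstream processing could drop or down-weight it. It flags rather than attenuates, and the class name, threshold, and block size are hypothetical values chosen for illustration.

```python
class BlockArtifactFlagger:
    """Flag blocks of a streamed single-channel EEG signal with large amplitude jumps.

    A simple peak-to-peak threshold, not an artifact attenuation method.
    threshold_uv and block_size are hypothetical, illustrative values.
    """

    def __init__(self, threshold_uv=100.0, block_size=4):
        self.threshold_uv = threshold_uv
        self.block_size = block_size
        self._buffer = []

    def push(self, sample_uv):
        """Add one sample (in microvolts).

        Returns None until a block is complete, then (block, is_artifact).
        """
        self._buffer.append(sample_uv)
        if len(self._buffer) < self.block_size:
            return None
        block, self._buffer = self._buffer, []
        peak_to_peak = max(block) - min(block)
        return block, peak_to_peak > self.threshold_uv


flagger = BlockArtifactFlagger(threshold_uv=100.0, block_size=4)
stream = [1.0, 2.0, -3.0, 4.0, 0.0, 150.0, -20.0, 5.0]
results = [r for r in (flagger.push(s) for s in stream) if r is not None]
for block, is_artifact in results:
    print(block, is_artifact)
```

The first block stays within 7 µV peak to peak and passes; the second contains a 170 µV jump and is flagged.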

Figure 3. In combination with a small EEG amplifier (not visible here) and a smartphone for data recording, the cEEGrid can be used to monitor brain activity over extended periods of time in public without standing out, and could become an integral part of a hearing device.

The applications of ear-centered mobile EEG go far beyond hearing aids. Recording brain activity in everyday situations, without the person being disturbed by the recording equipment and without drawing attention from others, will be useful for a wide range of applications (see figure 3). It brings EEG close to what many have identified as the digital revolution in health service delivery: mobile Health. We envision applications for therapeutic and diagnostic purposes in individualized medicine, and for various scenarios concerned with workplace safety.

By the way, what about the sounds you attended to at the beginning? Are they still there?

References

- [1] B. S. Wilson, D. L. Tucci, M. H. Merson, and G. M. O’Donoghue, “Global hearing health care: new findings and perspectives,” Lancet, vol. 390, no. 10111, pp. 2503–2515, 2017.

- [2] J. A. O’Sullivan et al., “Attentional Selection in a Cocktail Party Environment Can Be Decoded from Single-Trial EEG,” Cereb. Cortex, vol. 25, no. 7, pp. 1697–1706, Jul. 2015.

- [3] M. G. Bleichner, B. Mirkovic, and S. Debener, “Identifying auditory attention with ear-EEG: cEEGrid versus high-density cap-EEG comparison,” J. Neural Eng., vol. 13, no. 6, pp. 1–13, 2016.

- [4] B. Mirkovic, S. Debener, M. Jaeger, and M. De Vos, “Decoding the attended speech stream with multi-channel EEG: implications for online, daily-life applications,” J. Neural Eng., vol. 12, no. 4, p. 046007, 2015.

- [5] I. Choi, S. Rajaram, L. A. Varghese, and B. G. Shinn-Cunningham, “Quantifying attentional modulation of auditory-evoked cortical responses from single-trial electroencephalography,” Front. Hum. Neurosci., vol. 7, p. 115, Apr. 2013.

- [6] M. G. Bleichner and S. Debener, “Concealed, Unobtrusive Ear-Centered EEG Acquisition: cEEGrids for Transparent EEG,” Front. Hum. Neurosci., 2017.

- [7] S. Debener, R. Emkes, M. De Vos, and M. Bleichner, “Unobtrusive ambulatory EEG using a smartphone and flexible printed electrodes around the ear,” Sci. Rep., vol. 5, p. 16743, 2015.

- [8] B. Mirkovic, M. G. Bleichner, S. Debener, and M. De Vos, “Speech envelope tracking using around-the-ear EEG,” Cereb. Cortex, vol. 25, no. 7, pp. 1697–1706, 2015.

- [9] F. Denk, M. Grzybowski, S. M. A. Ernst, B. Kollmeier, S. Debener, and M. G. Bleichner, “Event-Related Potentials Measured From In and Around the Ear Electrodes Integrated in a Live Hearing Device for Monitoring Sound Perception,” Trends Hear., vol. 22, p. 233121651878821, 2018.

- [10] F. Denk, M. Hiipakka, B. Kollmeier, and S. M. A. Ernst, “An individualised acoustically transparent earpiece for hearing devices,” Int. J. Audiol., vol. 56, no. S2, pp. 355–363, Mar. 2017.

About the Authors

Martin G. Bleichner studied Cognitive Science at the University of Osnabrück, Germany, where he received his B.Sc. in 2005. He studied Cognitive Neuroscience in Utrecht, The Netherlands, where he received his M.Sc. in 2008. He carried out research on the first fully implantable brain-computer interface at the University Hospital Utrecht and received his PhD in 2014. Since 2013 he has worked at the neuropsychology lab at the University of Oldenburg, Germany. He develops mobile and ear-centered EEG solutions to move EEG acquisition beyond the lab. Besides his research on integrating EEG and hearing devices, he uses mobile EEG to study social interaction.

Dr. rer. nat. Stephan M.A. Ernst studied Physics at the University of Oldenburg, Germany, focusing on medical physics and audiology. He received his Master’s degree in Physics in 2005 and his PhD in 2008. After three years as a postdoc in the Department of Experimental Psychology at the University of Cambridge, UK, he returned to the University of Oldenburg, Germany, to work at the Cluster of Excellence “Hearing4all”. Since 2016 he has headed the Audiology Group at the University Hospital of Gießen, Germany.

Marleen Grzybowski studied Hearing Technology and Audiology in Oldenburg, Germany. She received a B.Eng. degree from the University of Applied Science Oldenburg and an M.Sc. degree from the Carl von Ossietzky University Oldenburg. She then joined the Branch Hearing, Speech and Audio Technology at the Fraunhofer Institution for Digital Media Technology. Her current research focuses on investigating how electrical brain stimulation improves speech understanding.

Stefan Debener, head of the neuropsychology lab at the University of Oldenburg, studied psychology at the Berlin University of Technology, Germany. He received his Ph.D. degree from the Dresden University of Technology, Germany, in 2001. He was an honorary reader at the School of Medicine, University of Southampton, United Kingdom, and full professor and director of the biomagnetic center, Dept. of Neurology, University Hospital Jena, Germany. Since 2009 he has been full professor of neuropsychology, Dept. of Psychology, University of Oldenburg, Germany. He is an expert in the field of mobile EEG and has developed the cEEGrid.

Birger Kollmeier, Director of the Department for Medical Physics and Acoustics at the School of Medicine and Health Sciences, Universität Oldenburg, studied physics and medicine at the Universität Göttingen, Germany. He received the Ph.D. degree in physics (supervisor: Prof. Dr. M.R. Schroeder) and the Ph.D. degree in medicine (supervisor: Prof. Dr. Dr. Ulrich Eysholdt) in 1986 and 1989, respectively. Since 1993, he has been Full Professor of physics at the Universität Oldenburg, Oldenburg, Germany, and head of the Abteilung Medizinische Physik. Since 2012 he has been coordinator of the Cluster of Excellence “Hearing4all”. He has supervised more than 55 Ph.D. theses and has authored or coauthored more than 350 scientific papers in various areas of hearing research, speech processing, auditory neuroscience, and audiology.

Florian Denk studied engineering physics and physics at the University of Oldenburg, Germany, where he received his B.Eng. and M.Sc. degrees in 2014 and 2017, respectively. Since 2017, he has been a graduate student working on his Ph.D. in the Medical Physics group at the University of Oldenburg, focussing on research into high-fidelity hearing devices. His main fields of work include acoustics and psychoacoustics related to the external ear, signal processing, and acoustic measurement technology. He also works on the mechanical design of in-ear devices and the integration of mobile ear-EEG sensors.