RESEARCH

Stephanie Martin

Many people cannot speak or communicate because of neurological conditions. They would benefit from a speech device that decodes their inner speech directly from brain activity. However, investigating and decoding inner speech has remained challenging because of the lack of behavioral output and the difficulty of precisely labeling the content of inner speech. Several brain-computer interfaces already support useful communication, such as moving a cursor on a screen1 and spelling letters2–4. Although this type of interface has proven useful, patients have had to learn to modulate their brain activity in an unnatural and unintuitive way, for example by performing mental tasks such as imagining a rotating cube, mental calculation, or movement attempts to operate an interface5, or by detecting rapidly presented letters on a screen6. In this article, we describe recent research findings on decoding inner speech directly from electrocorticographic (ECoG) recordings, with the aim of building assistive communication technologies. We also highlight challenges commonly encountered when investigating inner speech, and propose potential solutions to move closer to a natural speech assistive device.

Speech has been investigated for many decades and involves several processing stages, such as acoustic processing in the early auditory cortex, phonetic and categorical encoding in posterior areas of the temporal lobe, and semantic and higher-level linguistic processing at later stages7. It remains unclear which speech representations are encoded during inner speech, and which of them is best suited to a communication aid.

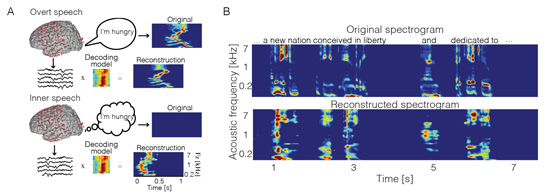

Recently, we decoded acoustic features (i.e., the spectrogram) from brain activity recorded during inner speech. To do so, we fit a linear regression model on data from overt, continuous speech and applied the same model to inner speech data. We adopted this strategy because overt and inner speech have been shown to share common neural mechanisms8–13. We compared the spectrogram reconstructed in the inner speech condition to the spectrogram of the actual sound produced during overt speech. Using this cross-condition regression framework, we showed that acoustic features are also encoded during inner speech, even in the absence of any perceived or produced sound. We further extended our findings to music imagery: we showed for the first time that spectrotemporal receptive fields are represented during music imagery, thereby revealing the acoustic tuning properties in the cerebral cortex. In addition, we found robust similarities between music imagery and music perception, and were able to successfully decode the spectrogram content. These findings also demonstrated that decoding models, typically applied in neuroprosthetics for motor and visual restoration, are applicable to auditory imagery.

Figure 1: Inner speech decoding. (A) Decoding Framework. (B) Examples of reconstructed spectrotemporal features from brain activity recorded during inner speech (adapted from 14 with permissions)
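The cross-condition regression idea can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and not the pipeline used in the study: the data are synthetic, the mixing matrix `A`, the helper names (`lagged`, `fit_ridge`, `mean_band_correlation`), the ridge penalty and the number of time lags are all hypothetical choices. The sketch fits a linear decoder on "overt" data, applies it unchanged to "inner" data, and scores the reconstruction by correlation with a reference spectrogram.

```python
import numpy as np

rng = np.random.default_rng(0)
T, F, C, n_lags = 2000, 8, 16, 3  # time points, frequency bands, electrodes, lags

def lagged(X, n_lags):
    """Stack time-lagged copies of X (time x channels) as regression features."""
    n_t, n_c = X.shape
    out = np.zeros((n_t, n_c * n_lags))
    for k in range(n_lags):
        out[k:, k * n_c:(k + 1) * n_c] = X[:n_t - k]
    return out

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression without intercept: W = (X'X + alpha*I)^-1 X'Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)

def mean_band_correlation(Y_true, Y_pred):
    """Pearson correlation per frequency band, averaged over bands."""
    r = [np.corrcoef(Y_true[:, f], Y_pred[:, f])[0, 1] for f in range(Y_true.shape[1])]
    return float(np.mean(r))

# Synthetic stand-ins (assumption): neural activity is a noisy linear mixture
# of the spectrogram, with a weaker contribution in the inner speech condition.
A = rng.standard_normal((F, C))          # hypothetical spectrogram-to-electrode mixing
S_overt = rng.standard_normal((T, F))    # spectrogram of the overt utterances
S_ref = rng.standard_normal((T, F))      # reference spectrogram for the inner condition
X_overt = S_overt @ A + 0.5 * rng.standard_normal((T, C))
X_inner = 0.6 * (S_ref @ A) + 0.5 * rng.standard_normal((T, C))

# Fit on overt speech only, then apply the same weights to inner speech.
W = fit_ridge(lagged(X_overt, n_lags), S_overt, alpha=10.0)
S_inner_hat = lagged(X_inner, n_lags) @ W

r_inner = mean_band_correlation(S_ref, S_inner_hat)
print(f"mean correlation, inner speech reconstruction: {r_inner:.2f}")
```

In real recordings the inner speech trials are not time-locked to the reference spectrogram, so a direct sample-by-sample correlation like the one above would need an additional alignment step.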

Beyond the relatively low-level acoustic representation, invariant phonetic information is extracted from a highly variable, continuous acoustic signal at a mid-level neural representation15. Recent ECoG studies have shown that even when a given phoneme is absent, the neural patterns correlate with those that would have been elicited by the actual speech sound, owing to top-down expectation (the phonetic masking effect16). From a decoding perspective, several studies have succeeded in classifying individual inner speech units into categories, such as covertly articulated vowels17, vowels and consonants during covert word production, and intended phonemes18. These studies are a proof of concept for basic decoding of individual speech units, but further research is required to establish whether phonemes can be decoded during continuous, conversational speech.

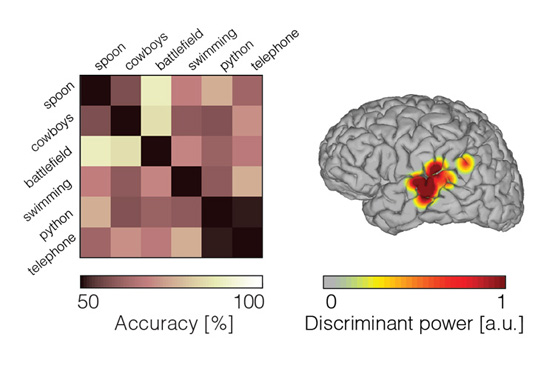

While several studies have demonstrated phoneme classification during inner speech, fewer results are available for word-level classification. Words have been decoded during overt speech from neural signals in the inferior frontal gyrus, superior temporal gyrus and motor areas14,19–21. In recent work, we classified individual words from high-frequency activity (70–150 Hz) recorded during an inner speech word repetition task22. To this end, we took advantage of the high temporal resolution offered by ECoG and classified neural features in the time domain using a support-vector machine model. To account for temporal irregularities across trials, we introduced a non-linear time alignment into the classification framework. Classification accuracy was significantly above chance across five patients. This study is a proof of concept for basic decoding of speech imagery, and highlights the potential of a speech prosthesis that allows patients to communicate a few clinically relevant words (e.g., hungry, pain).

Figure 2: Word classification during inner speech. Pairwise classification accuracy (random level is 50%; left panel). Discriminant power (right panel).
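To illustrate why a non-linear time alignment helps with pairwise word classification, here is a minimal sketch under stated assumptions. It is not the method of the study, which used a support-vector machine; for brevity this sketch swaps in a one-nearest-neighbour rule on a dynamic time warping (DTW) distance. The "trials" are synthetic one-dimensional time courses, and the templates `word_a` and `word_b`, the helpers `make_trial` and `classify`, and all parameter values are hypothetical.

```python
import math
import random

def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three admissible warping steps.
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]

def make_trial(template, rng, noise=0.05):
    """Randomly stretch/compress and delay a template, then add Gaussian noise."""
    rate = rng.uniform(0.8, 1.25)   # speaking-rate variation across repetitions
    shift = rng.randint(0, 5)       # onset delay in samples
    n = len(template)
    trial = []
    for t in range(n):
        idx = min(max(int(round((t - shift) * rate)), 0), n - 1)
        trial.append(template[idx] + rng.gauss(0.0, noise))
    return trial

def classify(trial, train):
    """Label of the training trial that is closest under DTW distance."""
    return min(train, key=lambda item: dtw_distance(trial, item[0]))[1]

rng = random.Random(0)
n = 50
# Hypothetical high-frequency activity templates for two imagined words.
word_a = [math.exp(-((t - 20) / 4) ** 2) for t in range(n)]
word_b = [math.exp(-((t - 15) / 4) ** 2) + math.exp(-((t - 32) / 4) ** 2)
          for t in range(n)]

templates = ((word_a, "A"), (word_b, "B"))
train = [(make_trial(w, rng), label) for w, label in templates for _ in range(10)]
test = [(make_trial(w, rng), label) for w, label in templates for _ in range(10)]

accuracy = sum(classify(x, y_train) == y for x, y in test
               for y_train in [train]) / len(test)
print(f"pairwise accuracy (chance level is 0.5): {accuracy:.2f}")
```

Because DTW allows samples to be repeated or skipped along the time axis, trials that differ only in tempo or onset remain close to each other, whereas a point-by-point distance would penalize them.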

Neural decoding models provide a promising research tool for drawing data-driven conclusions about complex speech representations and for uncovering the link between inner speech representations and neural responses. However, although these results reveal a promising avenue for direct decoding of natural speech, they also show that performance is currently insufficient to build a realistic brain-based device. The lack of behavioral output during imagery and the inability to monitor the spectrotemporal structure of inner speech represent a major challenge. Critically, inner speech cannot be directly observed by an experimenter. As a consequence, it is difficult to time-lock brain activity to a measurable stimulus or behavioral state, which precludes the use of standard models that assume synchronized input-output data. In addition, natural speech production is not solely under conscious control; it is affected by various factors, including gender, emotional state, tempo, pronunciation and dialect, which result in temporal irregularities (stretching/compressing, onset/offset delays) across repetitions. These irregularities make it difficult to exploit the temporal resolution of electrocorticography when investigating inner speech. Once such challenges are solved, decoding speech directly from neural activity opens the door to new communication interfaces that may allow more natural, speech-like communication for patients with severe communication deficits.

Acknowledgment

This article is adapted from the author’s doctoral thesis: Understanding and decoding imagined speech using intracranial recordings in the human brain. Martin S. 2017, doi:10.5075/epfl-thesis-7740.

References

- Wolpaw, J. R., McFarland, D. J., Neat, G. W. & Forneris, C. A. An EEG-based brain-computer interface for cursor control. Electroencephalogr. Clin. Neurophysiol. 78, 252–259 (1991).

- Farwell, L. A. & Donchin, E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 70, 510–523 (1988).

- Vansteensel, M. J. et al. Fully Implanted Brain–Computer Interface in a Locked-In Patient with ALS. N. Engl. J. Med. 375, 2060–2066 (2016).

- Pandarinath, C. et al. High performance communication by people with paralysis using an intracortical brain-computer interface. eLife 6, (2017).

- Millán, J. del R. et al. Asynchronous non-invasive brain-actuated control of an intelligent wheelchair. Conf. Proc. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. IEEE Eng. Med. Biol. Soc. Conf. 2009, 3361–3364 (2009).

- Nijboer, F. et al. A P300-based brain-computer interface for people with amyotrophic lateral sclerosis. Clin. Neurophysiol. Off. J. Int. Fed. Clin. Neurophysiol. 119, 1909–1916 (2008).

- Hickok, G. & Poeppel, D. The cortical organization of speech processing. Nat. Rev. Neurosci. 8, 393–402 (2007).

- Palmer, E. D. et al. An Event-Related fMRI Study of Overt and Covert Word Stem Completion. NeuroImage 14, 182–193 (2001).

- Rosen, H. J., Ojemann, J. G., Ollinger, J. M. & Petersen, S. E. Comparison of Brain Activation during Word Retrieval Done Silently and Aloud Using fMRI. Brain Cogn. 42, 201–217 (2000).

- McGuire, P. K. et al. Functional anatomy of inner speech and auditory verbal imagery. Psychol. Med. 26, 29–38 (1996).

- Aleman, A. The Functional Neuroanatomy of Metrical Stress Evaluation of Perceived and Imagined Spoken Words. Cereb. Cortex 15, 221–228 (2004).

- Hinke, R. M. et al. Functional magnetic resonance imaging of Broca’s area during internal speech. Neuroreport 4, 675–678 (1993).

- Geva, Correia & Warburton. Diffusion tensor imaging in the study of language and aphasia. Aphasiology 25, 543–558 (2011).

- Chang, E. F. et al. Categorical speech representation in human superior temporal gyrus. Nat. Neurosci. 13, 1428–1432 (2010).

- Leonard, M. K., Baud, M. O., Sjerps, M. J. & Chang, E. F. Perceptual restoration of masked speech in human cortex. Nat. Commun. 7, 13619 (2016).

- Ikeda, S. et al. Neural decoding of single vowels during covert articulation using electrocorticography. Front. Hum. Neurosci. 125 (2014). doi:10.3389/fnhum.2014.00125

- Brumberg, J. S., Wright, E. J., Andreasen, D. S., Guenther, F. H. & Kennedy, P. R. Classification of intended phoneme production from chronic intracortical microelectrode recordings in speech-motor cortex. Front. Neurosci. (2011). doi:10.3389/fnins.2011.00065

- Martin, S. et al. Decoding spectrotemporal features of overt and covert speech from the human cortex. Front. Neuroengineering 7, 14 (2014).

- Pasley, B. N. et al. Reconstructing Speech from Human Auditory Cortex. PLoS Biol. 10, e1001251 (2012).

- Tankus, A., Fried, I. & Shoham, S. Structured neuronal encoding and decoding of human speech features. Nat. Commun. 3, 1015 (2012).

- Kellis, S. et al. Decoding spoken words using local field potentials recorded from the cortical surface. J. Neural Eng. 7, 056007 (2010).

- Martin, S. et al. Word pair classification during imagined speech using direct brain recordings. Sci. Rep. 6, 25803 (2016).

- Martin, S. et al. Neural Encoding of Auditory Features during Music Perception and Imagery. Cereb. Cortex 1–12 (2017). doi:10.1093/cercor/bhx277

- Schön, D. et al. Similar cerebral networks in language, music and song perception. NeuroImage 51, 450–461 (2010).

- Callan, D. E. et al. Song and speech: Brain regions involved with perception and covert production. NeuroImage 31, 1327–1342 (2006).

- Zatorre, R. J., Halpern, A. R., Perry, D. W., Meyer, E. & Evans, A. C. Hearing in the Mind’s Ear: A PET Investigation of Musical Imagery and Perception. J. Cogn. Neurosci. 8, 29–46 (1996).

- Griffiths, T. D. Human complex sound analysis. Clin. Sci. Lond. Engl. 1979 96, 231–234 (1999).

- Halpern, A. R. & Zatorre, R. J. When That Tune Runs Through Your Head: A PET Investigation of Auditory Imagery for Familiar Melodies. Cereb. Cortex 9, 697–704 (1999).

- Rauschecker, J. P. Cortical plasticity and music. Ann. N. Y. Acad. Sci. 930, 330–336 (2001).

- Halpern, A. R., Zatorre, R. J., Bouffard, M. & Johnson, J. A. Behavioral and neural correlates of perceived and imagined musical timbre. Neuropsychologia 42, 1281–1292 (2004).

- Kraemer, D. J. M., Macrae, C. N., Green, A. E. & Kelley, W. M. Musical imagery: Sound of silence activates auditory cortex. Nature 434, 158–158 (2005).

- Aertsen, A. M. H. J., Olders, J. H. J. & Johannesma, P. I. M. Spectro-temporal receptive fields of auditory neurons in the grassfrog: III. analysis of the stimulus-event relation for natural stimuli. Biol. Cybern. 39, 195–209 (1981).

- Chi, T., Ru, P. & Shamma, S. A. Multiresolution spectrotemporal analysis of complex sounds. J. Acoust. Soc. Am. 118, 887 (2005).

- Clopton, B. M. & Backoff, P. M. Spectrotemporal receptive fields of neurons in cochlear nucleus of guinea pig. Hear. Res. 52, 329–344 (1991).

- Theunissen, F. E., Sen, K. & Doupe, A. J. Spectral-temporal receptive fields of nonlinear auditory neurons obtained using natural sounds. J. Neurosci. 20, 2315–2331 (2000).